2.8 - Weights initialization


!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);

follow the explanation here

https://adventuresinmachinelearning.com/weight-initialization-tutorial-tensorflow/

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from IPython.display import Image

%matplotlib inline

import tensorflow as tf

tf.__version__

'2.4.0'

mnist = pd.read_csv("local/data/mnist1.5k.csv.gz", compression="gzip", header=None).values

X=mnist[:,1:785]/255.

y=mnist[:,0]

print("shape of the images and the classes", X.shape, y.shape)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.2)

# one-hot encode the labels: row i of the identity matrix encodes class i

y_train_oh = np.eye(10)[y_train]

y_test_oh = np.eye(10)[y_test]

print(X_train.shape, y_train_oh.shape)

shape of the images and the classes (1500, 784) (1500,)

(1200, 784) (1200, 10)
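The one-hot encoding used above relies on indexing an identity matrix with the label array. A minimal sketch of that trick, using a small hypothetical `labels` array in place of `y_train`:

```python
import numpy as np

# hypothetical integer labels, standing in for y_train
labels = np.array([3, 0, 9, 3])

# row i of the 10x10 identity matrix is the one-hot vector for class i,
# so indexing the identity with the label array encodes all labels at once
one_hot = np.eye(10)[labels]

print(one_hot.shape)
# argmax along each row recovers the original integer labels
print(one_hot.argmax(axis=1))
```

This only works when the labels are integers in the range 0 to 9, which is the case for the MNIST classes here.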

load data and train a simple model

from tensorflow.keras import Sequential, Model

from tensorflow.keras.layers import Dense, Dropout, Flatten, concatenate, Input

from tensorflow.keras.backend import clear_session

from tensorflow import keras

import tensorflow as tf

import tensorflow.keras.backend as K

tf.keras.backend.set_floatx('float32')

def get_model(input_dim=784, output_dim=10, layer_sizes=[10]*6, activation="relu", sigma=1):
    model = Sequential()
    # only the first layer uses the N(0, sigma^2) initializers;
    # the remaining layers keep the Keras defaults
    init1k = keras.initializers.RandomNormal(mean=.0, stddev=sigma, seed=None)
    init1b = keras.initializers.RandomNormal(mean=.0, stddev=sigma, seed=None)
    model.add(Dense(layer_sizes[0], activation=activation, input_dim=input_dim, name="Layer_%02d_Input"%(0),
                    kernel_initializer=init1k,
                    bias_initializer=init1b,
                    dtype=tf.float64
                    ))
    for i, hsize in enumerate(layer_sizes[1:]):
        model.add(Dense(hsize, activation=activation, name="Layer_%02d_Hidden"%(i+1), dtype=tf.float64))
    model.add(Dense(output_dim, activation="softmax", name="Layer_%02d_Output"%(len(layer_sizes)), dtype=tf.float64))
    model.compile(optimizer='adam', loss=tf.keras.losses.categorical_crossentropy, metrics=['accuracy'])
    model.reset_states()
    return model

def get_gradients_functions(model):
    # symbolic input, per-layer output tensors and trainable weights of the model
    T_input = model.input
    T_outputs = [layer.output for layer in model.layers]
    T_weights = model.trainable_weights
    # one function per layer, mapping an input batch to that layer's output
    F_outputs = [K.function([T_input], [out]) for out in T_outputs]

    def make_gradient_function(i):
        # returns a function computing the gradient of the loss
        # with respect to the i-th trainable variable
        def f(X, y):
            v = model.trainable_variables[i]
            with tf.GradientTape() as t:
                # Keras losses take (y_true, y_pred), in that order
                loss = model.loss(y, model(X))
            return t.gradient(loss, v).numpy()
        return f

    F_gradients = [make_gradient_function(i) for i in range(len(model.trainable_variables))]
    return T_input, T_outputs, T_weights, F_outputs, F_gradients

model = get_model(layer_sizes=[20,15,15], activation="sigmoid", sigma=20)

model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
Layer_00_Input (Dense)       (None, 20)                15700
_________________________________________________________________
Layer_01_Hidden (Dense)      (None, 15)                315
_________________________________________________________________
Layer_02_Hidden (Dense)      (None, 15)                240
_________________________________________________________________
Layer_03_Output (Dense)      (None, 10)                160
=================================================================
Total params: 16,415
Trainable params: 16,415
Non-trainable params: 0
_________________________________________________________________

T_input, T_outputs, T_weights, F_outputs, F_gradients = get_gradients_functions(model)

T_outputs

[<KerasTensor: shape=(None, 20) dtype=float64 (created by layer 'Layer_00_Input')>,

<KerasTensor: shape=(None, 15) dtype=float64 (created by layer 'Layer_01_Hidden')>,

<KerasTensor: shape=(None, 15) dtype=float64 (created by layer 'Layer_02_Hidden')>,

<KerasTensor: shape=(None, 10) dtype=float64 (created by layer 'Layer_03_Output')>]

model.get_config()

{'name': 'sequential',

'layers': [{'class_name': 'InputLayer',

'config': {'batch_input_shape': (None, 784),

'dtype': 'float64',

'sparse': False,

'ragged': False,

'name': 'Layer_00_Input_input'}},

{'class_name': 'Dense',

'config': {'name': 'Layer_00_Input',

'trainable': True,

'batch_input_shape': (None, 784),

'dtype': 'float64',

'units': 20,

'activation': 'sigmoid',

'use_bias': True,

'kernel_initializer': {'class_name': 'RandomNormal',

'config': {'mean': 0.0, 'stddev': 20, 'seed': None}},

'bias_initializer': {'class_name': 'RandomNormal',

'config': {'mean': 0.0, 'stddev': 20, 'seed': None}},

'kernel_regularizer': None,

'bias_regularizer': None,

'activity_regularizer': None,

'kernel_constraint': None,

'bias_constraint': None}},

{'class_name': 'Dense',

'config': {'name': 'Layer_01_Hidden',

'trainable': True,

'dtype': 'float64',

'units': 15,

'activation': 'sigmoid',

'use_bias': True,

'kernel_initializer': {'class_name': 'GlorotUniform',

'config': {'seed': None}},

'bias_initializer': {'class_name': 'Zeros', 'config': {}},

'kernel_regularizer': None,

'bias_regularizer': None,

'activity_regularizer': None,

'kernel_constraint': None,

'bias_constraint': None}},

{'class_name': 'Dense',

'config': {'name': 'Layer_02_Hidden',

'trainable': True,

'dtype': 'float64',

'units': 15,

'activation': 'sigmoid',

'use_bias': True,

'kernel_initializer': {'class_name': 'GlorotUniform',

'config': {'seed': None}},

'bias_initializer': {'class_name': 'Zeros', 'config': {}},

'kernel_regularizer': None,

'bias_regularizer': None,

'activity_regularizer': None,

'kernel_constraint': None,

'bias_constraint': None}},

{'class_name': 'Dense',

'config': {'name': 'Layer_03_Output',

'trainable': True,

'dtype': 'float64',

'units': 10,

'activation': 'softmax',

'use_bias': True,

'kernel_initializer': {'class_name': 'GlorotUniform',

'config': {'seed': None}},

'bias_initializer': {'class_name': 'Zeros', 'config': {}},

'kernel_regularizer': None,

'bias_regularizer': None,

'activity_regularizer': None,

'kernel_constraint': None,

'bias_constraint': None}}]}
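The configuration above shows that only Layer_00_Input uses the RandomNormal initializer with the chosen sigma; the other layers keep the Keras defaults (GlorotUniform kernels and zero biases). As a rough sketch of what that default implies, the snippet below computes the Glorot uniform limit, sqrt(6 / (fan_in + fan_out)), for the three layers that use it. The helper name `glorot_uniform_limit` and the `default_layers` dictionary are illustrative and not part of the notebook.

```python
import math

def glorot_uniform_limit(fan_in, fan_out):
    # Glorot (Xavier) uniform draws weights from U(-limit, limit)
    # with limit = sqrt(6 / (fan_in + fan_out))
    return math.sqrt(6.0 / (fan_in + fan_out))

# (fan_in, fan_out) of the layers that keep the default initializer,
# read off the model summary: 20 -> 15 -> 15 -> 10
default_layers = {
    "Layer_01_Hidden": (20, 15),
    "Layer_02_Hidden": (15, 15),
    "Layer_03_Output": (15, 10),
}

for name, (fan_in, fan_out) in default_layers.items():
    limit = glorot_uniform_limit(fan_in, fan_out)
    # a uniform distribution on (-limit, limit) has std = limit / sqrt(3)
    print(name, "limit=%.3f" % limit, "std=%.3f" % (limit / math.sqrt(3)))
```

The resulting standard deviations are well below 1, so in these experiments the sigma argument only changes the scale of the first layer.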

T_input, T_outputs, T_weights, F_outputs, F_gradients = get_gradients_functions(model)

scale_X=.2

shift_X =.5

!rm -rf log

tb_callback = keras.callbacks.TensorBoard(log_dir='./log/winit', histogram_freq=1, write_grads=True, write_graph=True, write_images=True)

model.fit((X_train-shift_X)*scale_X, y_train_oh, epochs=30, batch_size=32,
          validation_data=((X_test-shift_X)*scale_X, y_test_oh),
          )  # add callbacks=[tb_callback] to log to TensorBoard

WARNING:tensorflow:`write_grads` will be ignored in TensorFlow 2.0 for the `TensorBoard` Callback.

Epoch 1/30

38/38 [==============================] - 1s 7ms/step - loss: 2.3824 - accuracy: 0.0700 - val_loss: 2.3389 - val_accuracy: 0.0633

Epoch 2/30

38/38 [==============================] - 0s 2ms/step - loss: 2.3160 - accuracy: 0.0903 - val_loss: 2.3143 - val_accuracy: 0.1200

Epoch 3/30

38/38 [==============================] - 0s 2ms/step - loss: 2.3025 - accuracy: 0.1276 - val_loss: 2.3052 - val_accuracy: 0.1200

Epoch 4/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2956 - accuracy: 0.1366 - val_loss: 2.2995 - val_accuracy: 0.1200

Epoch 5/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2911 - accuracy: 0.1292 - val_loss: 2.2965 - val_accuracy: 0.1200

Epoch 6/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2911 - accuracy: 0.1159 - val_loss: 2.2925 - val_accuracy: 0.1200

Epoch 7/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2899 - accuracy: 0.1176 - val_loss: 2.2902 - val_accuracy: 0.1200

Epoch 8/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2863 - accuracy: 0.1247 - val_loss: 2.2873 - val_accuracy: 0.1200

Epoch 9/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2796 - accuracy: 0.1328 - val_loss: 2.2833 - val_accuracy: 0.1200

Epoch 10/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2807 - accuracy: 0.1299 - val_loss: 2.2783 - val_accuracy: 0.1233

Epoch 11/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2792 - accuracy: 0.1330 - val_loss: 2.2745 - val_accuracy: 0.1267

Epoch 12/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2701 - accuracy: 0.1386 - val_loss: 2.2710 - val_accuracy: 0.1233

Epoch 13/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2613 - accuracy: 0.1528 - val_loss: 2.2634 - val_accuracy: 0.1533

Epoch 14/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2551 - accuracy: 0.1687 - val_loss: 2.2570 - val_accuracy: 0.1867

Epoch 15/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2501 - accuracy: 0.1962 - val_loss: 2.2477 - val_accuracy: 0.2100

Epoch 16/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2346 - accuracy: 0.2027 - val_loss: 2.2394 - val_accuracy: 0.2200

Epoch 17/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2299 - accuracy: 0.2420 - val_loss: 2.2276 - val_accuracy: 0.2400

Epoch 18/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2141 - accuracy: 0.2739 - val_loss: 2.2158 - val_accuracy: 0.2367

Epoch 19/30

38/38 [==============================] - 0s 2ms/step - loss: 2.2075 - accuracy: 0.2595 - val_loss: 2.2004 - val_accuracy: 0.2633

Epoch 20/30

38/38 [==============================] - 0s 2ms/step - loss: 2.1874 - accuracy: 0.2863 - val_loss: 2.1857 - val_accuracy: 0.2733

Epoch 21/30

38/38 [==============================] - 0s 2ms/step - loss: 2.1739 - accuracy: 0.2888 - val_loss: 2.1679 - val_accuracy: 0.2867

Epoch 22/30

38/38 [==============================] - 0s 2ms/step - loss: 2.1684 - accuracy: 0.2844 - val_loss: 2.1502 - val_accuracy: 0.2833

Epoch 23/30

38/38 [==============================] - 0s 2ms/step - loss: 2.1453 - accuracy: 0.2817 - val_loss: 2.1315 - val_accuracy: 0.2933

Epoch 24/30

38/38 [==============================] - 0s 2ms/step - loss: 2.1047 - accuracy: 0.3103 - val_loss: 2.1106 - val_accuracy: 0.2967

Epoch 25/30

38/38 [==============================] - 0s 2ms/step - loss: 2.0978 - accuracy: 0.2924 - val_loss: 2.0893 - val_accuracy: 0.2933

Epoch 26/30

38/38 [==============================] - 0s 2ms/step - loss: 2.0690 - accuracy: 0.3182 - val_loss: 2.0703 - val_accuracy: 0.2933

Epoch 27/30

38/38 [==============================] - 0s 2ms/step - loss: 2.0508 - accuracy: 0.3184 - val_loss: 2.0510 - val_accuracy: 0.2967

Epoch 28/30

38/38 [==============================] - 0s 2ms/step - loss: 2.0495 - accuracy: 0.2791 - val_loss: 2.0312 - val_accuracy: 0.3000

Epoch 29/30

38/38 [==============================] - 0s 2ms/step - loss: 2.0039 - accuracy: 0.3155 - val_loss: 2.0111 - val_accuracy: 0.3033

Epoch 30/30

38/38 [==============================] - 0s 2ms/step - loss: 1.9814 - accuracy: 0.3261 - val_loss: 1.9950 - val_accuracy: 0.3067

<tensorflow.python.keras.callbacks.History at 0x7ff7019169d0>

Effects of different initializations

Study the following function carefully.

See the notebook on Inspecting model internals to understand get_tensors_and_functions and the objects it returns; the helper playing that role here is get_gradients_functions.

def train_experiment(model, sigma, X_train, X_test):
    # note: the `model` argument is ignored; a fresh model is built for the given sigma.
    # y_train_oh and y_test_oh are taken from the enclosing notebook scope
    model = get_model(layer_sizes=[20,15,15], activation="sigmoid", sigma=sigma)
    T_input, T_outputs, T_weights, F_outputs, F_gradients = get_gradients_functions(model)

    # first-layer weights, outputs and gradients before training
    w0_before = model.get_weights()[0].reshape(-1)
    o0_before = F_outputs[0]([X_train])[0].reshape(-1)
    g0_before = F_gradients[0](X_train, y_train_oh).reshape(-1)

    model.fit(X_train, y_train_oh, epochs=30, batch_size=32,
              validation_data=(X_test, y_test_oh), verbose=0)

    # the same quantities after training
    w0_after = model.get_weights()[0].reshape(-1)
    o0_after = F_outputs[0]([X_train])[0].reshape(-1)
    g0_after = F_gradients[0](X_train, y_train_oh).reshape(-1)

    acc, val_acc = model.history.history["accuracy"], model.history.history["val_accuracy"]

    plt.figure(figsize=(20,3))

    # panel 1: accuracy per epoch
    plt.subplot(141)
    plt.plot(acc, label="train_acc")
    plt.plot(val_acc, label="test_acc")
    plt.legend();
    plt.grid()
    plt.title("sigma=%.2f"%(sigma))
    plt.xlabel("epoch")

    # panel 2: first-layer weights
    plt.subplot(142)
    plt.hist(w0_after, bins=30, density=True, label="after", alpha=.5);
    plt.hist(w0_before, bins=30, density=True, label="before", alpha=.5);
    plt.legend();
    plt.title("layer 0 weights")

    # panel 3: first-layer outputs
    plt.subplot(143)
    plt.hist(o0_after, bins=30, density=True, label="after", alpha=.5);
    plt.hist(o0_before, bins=30, density=True, label="before", alpha=.5);
    plt.legend();
    plt.title("layer 0 outputs")

    # panel 4: first-layer gradients, trimmed so outliers do not dominate the histogram
    plt.subplot(144)
    def get_percentile(k, perc=90):
        # keep only the values strictly inside (-p, p), with p the perc-th percentile of |k|
        p = np.percentile(np.abs(k), [perc])[0]
        return k[(k>-p)&(k<p)]
    plt.hist(get_percentile(g0_after), bins=30, density=True, label="after", alpha=.5);
    plt.hist(get_percentile(g0_before), bins=30, density=True, label="before", alpha=.5);
    plt.legend();
    plt.title("layer 0 gradients")

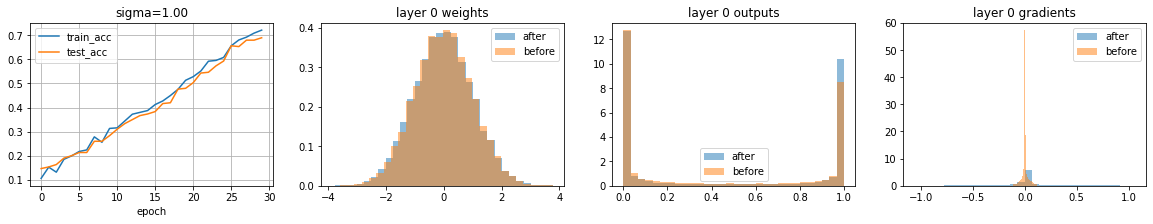
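The get_percentile helper inside train_experiment trims the gradient values before plotting, so that a few very large gradients do not stretch the histogram axis. A small standalone sketch of its behaviour, using hypothetical values (95 small entries plus 5 large outliers) rather than real gradients:

```python
import numpy as np

def get_percentile(k, perc=90):
    # p is the value below which `perc` percent of the absolute values fall
    p = np.percentile(np.abs(k), [perc])[0]
    # keep only the entries strictly inside (-p, p)
    return k[(k > -p) & (k < p)]

# hypothetical "gradients": 95 small values and 5 large outliers
small = np.linspace(-0.05, 0.05, 95)
outliers = np.array([3.0, -4.0, 5.0, -6.0, 7.0])
g = np.concatenate([small, outliers])

trimmed = get_percentile(g)

print("before:", g.size, "values, max |g| =", np.abs(g).max())
print("after: ", trimmed.size, "values, max |g| =", np.abs(trimmed).max())
```

Note that the histograms of layer 0 gradients therefore show roughly the central 90% of the values, not the full distribution.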

initializing with a standard normal (\(\mu=0\) and \(\sigma=1\))

Histograms show weights, outputs and gradients before and after training.

In good configurations:

- weights move during training
- gradients are spread around zero before training
- outputs before training are spread out

model = get_model(layer_sizes=[20,15,15], activation="sigmoid", sigma=0.1)

model.outputs[0]

<KerasTensor: shape=(None, 10) dtype=float64 (created by layer 'Layer_03_Output')>

model.loss

<function tensorflow.python.keras.losses.categorical_crossentropy(y_true, y_pred, from_logits=False, label_smoothing=0)>

train_experiment(model, sigma=1, X_train=X_train, X_test=X_test)

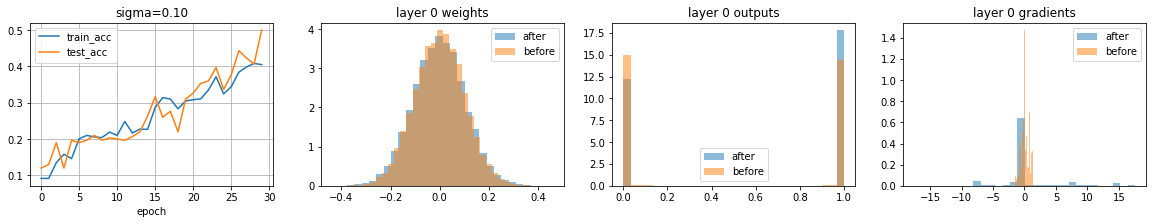

initializing with a small \(\sigma\)

train_experiment(model, sigma=.1, X_train=X_train, X_test=X_test)

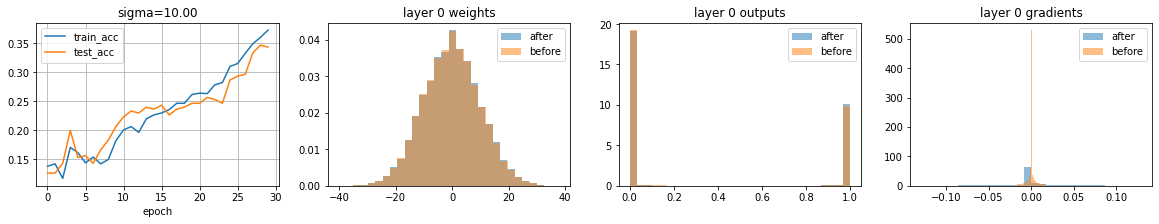

initializing with a large \(\sigma\)

Observe how the gradients are concentrated very tightly around zero at the beginning of training.

train_experiment(model, sigma=10, X_train=X_train, X_test=X_test)

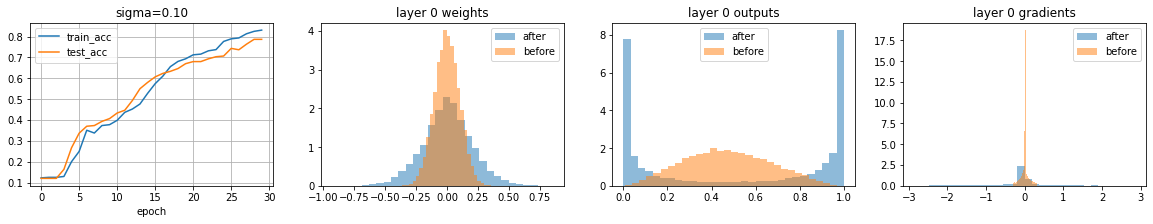
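The observation that a large \(\sigma\) concentrates the initial gradients around zero can be illustrated without training anything. The sketch below is a simplified stand-in, not the notebook's experiment: it draws hypothetical inputs uniformly in [0, 1] (`X_demo`), samples first-layer weights and biases from N(0, sigma^2) as get_model does, and measures the average slope of the sigmoid at the resulting pre-activations. Every backpropagated gradient through that layer is multiplied by this slope.

```python
import numpy as np

def sigmoid(z):
    # numerically stable form of 1 / (1 + exp(-z))
    return 0.5 * (1.0 + np.tanh(0.5 * z))

rng = np.random.default_rng(0)

# hypothetical stand-in for a batch of inputs scaled to [0, 1]
X_demo = rng.uniform(0.0, 1.0, size=(200, 784))

mean_slope = {}
for sigma in [0.1, 1.0, 10.0]:
    # weights and biases of a 784 -> 20 layer drawn from N(0, sigma^2)
    W = rng.normal(0.0, sigma, size=(784, 20))
    b = rng.normal(0.0, sigma, size=20)
    z = X_demo @ W + b
    a = sigmoid(z)
    # derivative of the sigmoid: at most 0.25, close to 0 when saturated
    mean_slope[sigma] = float(np.mean(a * (1.0 - a)))
    print("sigma=%5.1f  std(z)=%8.2f  mean sigmoid slope=%.4f"
          % (sigma, z.std(), mean_slope[sigma]))
```

As sigma grows, the pre-activations spread far from zero, most units saturate, and the mean slope shrinks, which is consistent with the narrow gradient histogram before training.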

initializing with a small \(\sigma\) but with large values for input data

Recall that \(XW+b\) is what enters the sigmoid function. If it is large in magnitude, it falls outside the approximately linear regime around zero, where the sigmoid saturates and its gradient is close to zero. It can be large because of \(W\) (a large initialization \(\sigma\)) or because of \(X\).

train_experiment(model, sigma=.1, X_train=X_train*100-50, X_test=X_test*100-50)
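As a rough sketch of why the input scale matters as much as \(\sigma\), consider a single input vector \(x\) and assume, as in the first layer built by get_model, that the weights \(w_i\) and the bias \(b\) are independent draws from \(N(0, \sigma^2)\). The symbol \(s\) for the sigmoid is introduced here only to avoid a clash with the initialization \(\sigma\).

```latex
z = \sum_{i=1}^{784} x_i w_i + b,
\qquad
\mathbb{E}[z] = 0,
\qquad
\operatorname{Var}(z) = \sigma^2 \left( \lVert x \rVert^2 + 1 \right)

s(z) = \frac{1}{1 + e^{-z}},
\qquad
s'(z) = s(z)\,\bigl(1 - s(z)\bigr) \le \tfrac{1}{4},
\qquad
s'(z) \approx e^{-\lvert z \rvert} \ \text{for large } \lvert z \rvert
```

The standard deviation of \(z\) is therefore proportional to both \(\sigma\) and (for large inputs) \(\lVert x \rVert\). Rescaling the inputs to the range \([-50, 50]\), as in the call above, makes \(\lVert x \rVert\) far larger than for inputs in \([0, 1]\), so even \(\sigma = 0.1\) pushes most pre-activations into the saturated region where \(s'(z)\) is close to zero.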