LAB 4.1 - Convolutions

Contents

LAB 4.1 - Convolutions¶

!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);

from local.lib.rlxmoocapi import submit, session

session.LoginSequence(endpoint=init.endpoint, course_id=init.course_id, lab_id="L04.01", varname="student");

import numpy as np

import os

import tensorflow as tf

import matplotlib.pyplot as plt

%matplotlib inline

tf.__version__

TASK 1: Implement convolutions¶

Complete the following function so that it implements a convolution in a set of for loops. YOU CANNOT USE TENSORFLOW

The parameters of the function are as follow:

img: the images, an array of size [1,y,x,k], where:1: you will be receiving only one imagex,y: the size of the imagek: the number of channels

f: the filters, an array of size [fy,fx,k,n], where:fx,fy: the size of the filtersk: the number of channels (must be the same as in images)n: the number of filters

activation: the activation function to use (such assigmoidorlinearas shown below)

the resulting activation map must be of shape [1, x-fx+1, y-fy+1, n] which is equivalent to a Conv2D Keras layer with padding='VALID'

sigmoid = lambda x: 1/(1+np.exp(-x))

linear = lambda x: x

def convolution_byhand(img, f, activation=sigmoid):

assert f.shape[2]==img.shape[3]

fy = f.shape[0]

fx = f.shape[1]

r = np.zeros( (1, img.shape[1]-fy+1, img.shape[2]-fx+1, f.shape[3] ))

for filter_nb in range(f.shape[3]):

for y in range(... # YOUR CODE HERE):

for x in range(... # YOUR CODE HERE):

r[0,y,x,filter_nb] = ... # YOUR CODE HERE

return r

with the following 5x5x1 image and 2 filters of size 3x3x1 you should get this result

>> convolution_byhand(img, f, activation=sigmoid)

array([[[[0.7109495 , 0.37754067],

[0.40131234, 0.35434369],

[0.40131234, 0.40131234]],

[[0.450166 , 0.5 ],

[0.59868766, 0.40131234],

[0.2890505 , 0.42555748]],

[[0.19781611, 0.64565631],

[0.81757448, 0.75026011],

[0.2890505 , 0.66818777]]]])

img = np.r_[[[0, 8, 5, 9, 7],

[0, 6, 4, 6, 9],

[6, 3, 8, 4, 5],

[8, 7, 7, 4, 5],

[7, 2, 5, 0, 2]]].reshape((1,5,5,1))/10 - 0.5

f = np.r_[[-1., -1., 1., -1., -1., -1., -1., 1., 1.],

[ 1., -1., 1., -1., -1., 1., -1., -1., -1.]].reshape(3,3,1,2)

convolution_byhand(img, f, activation=sigmoid)

which should yield the same result as simply applying a keras Conv2D layer

c = tf.keras.layers.Conv2D(filters=f.shape[-1], kernel_size=f.shape[:2], activation="sigmoid", padding='VALID', dtype=tf.float64)

c.build(input_shape=(1,7,7,f.shape[2])) # any shape would do here, just initializing weights

c.set_weights([f, np.zeros(2)])

c(img).numpy()

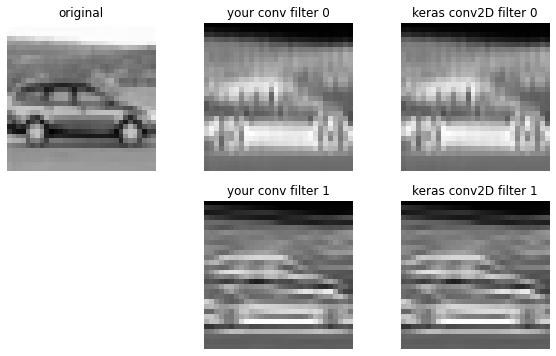

you can also check the effect of the filters in a sample image

from skimage import io

img = io.imread("local/imgs/sample_img.png")

img = img.reshape(1,*img.shape, 1)

img = (img-np.min(img))/(np.max(img)-np.min(img))

a1 = convolution_byhand(img, f)

a2 = c(img).numpy()

plt.figure(figsize=(10,6))

plt.subplot(231); plt.imshow(img[0,:,:,0], cmap=plt.cm.Greys_r); plt.axis("off"); plt.title("original")

plt.subplot(232); plt.imshow(a1[0,:,:,0], cmap=plt.cm.Greys_r); plt.axis("off"); plt.title("your conv filter 0")

plt.subplot(233); plt.imshow(a2[0,:,:,0], cmap=plt.cm.Greys_r); plt.axis("off"); plt.title("keras conv2D filter 0")

plt.subplot(235); plt.imshow(a1[0,:,:,1], cmap=plt.cm.Greys_r); plt.axis("off"); plt.title("your conv filter 1")

plt.subplot(236); plt.imshow(a2[0,:,:,1], cmap=plt.cm.Greys_r); plt.axis("off"); plt.title("keras conv2D filter 1");

Registra tu solución en linea

student.submit_task(namespace=globals(), task_id='T1');

TASK 2: Prepare image for one-shot convolution¶

We will prepare images to do the convolution with one dot product operation for each filter and each image. This will use more memory but will increase performance, specially if we have many filters.

For instance, assume we have the following 1x8x6x1 images (only one image, one channel) and 2x3x1x2 filters (one channel, two filters)

img = np.r_[[9, 4, 9, 6, 7, 1, 2, 2, 8, 0, 8, 6, 8, 6, 5, 5, 1, 4, 3, 4, 4, 4,

3, 6, 5, 1, 7, 9, 1, 4, 0, 3, 1, 4, 3, 5, 1, 5, 5, 4, 9, 6, 3, 2,

8, 9, 0, 6]].reshape(1,8,6,1)

f = np.r_[[6, 7, 8, 5, 2, 9, 6, 4, 9, 7, 9, 7]].reshape(2,3,1,2)

print ("images", img.shape)

print (img[0,:,:,0])

print ("--")

print ("filters", f.shape)

print (f[:,:,0,0])

print (f[:,:,0,1])

observe that if we repeat and rearrange img in the following way

pimg = np.array([[[[9., 4., 9., 2., 2., 8.],

[4., 9., 6., 2., 8., 0.],

[9., 6., 7., 8., 0., 8.],

[6., 7., 1., 0., 8., 6.]],

[[2., 2., 8., 8., 6., 5.],

[2., 8., 0., 6., 5., 5.],

[8., 0., 8., 5., 5., 1.],

[0., 8., 6., 5., 1., 4.]],

[[8., 6., 5., 3., 4., 4.],

[6., 5., 5., 4., 4., 4.],

[5., 5., 1., 4., 4., 3.],

[5., 1., 4., 4., 3., 6.]],

[[3., 4., 4., 5., 1., 7.],

[4., 4., 4., 1., 7., 9.],

[4., 4., 3., 7., 9., 1.],

[4., 3., 6., 9., 1., 4.]],

[[5., 1., 7., 0., 3., 1.],

[1., 7., 9., 3., 1., 4.],

[7., 9., 1., 1., 4., 3.],

[9., 1., 4., 4., 3., 5.]],

[[0., 3., 1., 1., 5., 5.],

[3., 1., 4., 5., 5., 4.],

[1., 4., 3., 5., 4., 9.],

[4., 3., 5., 4., 9., 6.]],

[[1., 5., 5., 3., 2., 8.],

[5., 5., 4., 2., 8., 9.],

[5., 4., 9., 8., 9., 0.],

[4., 9., 6., 9., 0., 6.]]]])

we only need one dot operation to obtain the convolution

pimg[0].dot(f[:,:,:,0].flatten())

array([[206., 192., 236., 220.],

[191., 202., 148., 151.],

[196., 182., 159., 151.],

[160., 214., 194., 159.],

[ 88., 143., 185., 166.],

[122., 145., 191., 217.],

[164., 243., 209., 216.]])

which we can compare with your previous function

convolution_byhand(img, f, activation=linear)[0,:,:,0]

array([[206., 192., 236., 220.],

[191., 202., 148., 151.],

[196., 182., 159., 151.],

[160., 214., 194., 159.],

[ 88., 143., 185., 166.],

[122., 145., 191., 217.],

[164., 243., 209., 216.]])

observe that:

resulting images in this example after convolution with any filter will have size 7x4

the resulting structure

pimghas at each pixel (in the 7x4 grid) a vector of six elements associated with it.this vector is the flattened contents of 2x3x1 image fragment located at that pixel that would by multiplied element by element by any filter located at that pixel during the convolution.

the first row in

pimgcorresponds to the flattened 2x3 fragment located at the top left corner ofimgthe second row contains the 2x3 fragment after shifting one pixel to the right.

we use the

np.flattenoperation.

COMPLETE the following function such that it prepares an image in this way, so that the convolution with a filter is just a dot operation. Where:

imgis the images array (assume we only have one image)fyandfxare the filter dimensions (2,3 in the example just above)

def prepare_img(img, fy, fx):

r = np.zeros( (1, img.shape[1]-fy+1, img.shape[2]-fx+1, fy*fx*img.shape[3] ))

for y in range(img.shape[1]-fy+1):

for x in range(img.shape[2]-fx+1):

r[0,y,x,:] = .. # YOUR CODE HERE

return r

test your code manually

pimg = prepare_img(img, *f.shape[:2])

print (pimg.shape)

pimg

Registra tu solución en linea

student.submit_task(namespace=globals(), task_id='T2');

TASK 3: Compute number of weights¶

Complete the following function so that it computes the number of weights of a convolutional architecture as specified in the arguments.

input_shape: the shape of the input imagefilters: a list of dictionaries, with one dictionary per convolutional layer specifying the number and size of the filters of the layer.dense_shapes: a list of integers, with one integer per dense layer specifying the number of neurons of the layer.

see the example below. YOU CANNOT USE TENSORFLOW in your implementation. Use the function tf_build_model below to understand the function arguments and check your implementation.

use VALID padding for the convolutional layers.

def compute_nb_weights(input_shape, filters, dense_shapes):

# YOUR CODE HERE

r = ...

return r

fs = [ {'n_filters': 90, 'size': 5}, {'n_filters': 15, 'size': 4}]

ds = [100,20,3]

input_shape = (100,100,3)

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Conv2D, Input, Flatten

def tf_build_model(input_shape, filters, dense_shapes):

model = Sequential()

model.add(Input(shape=input_shape)) # 250x250 RGB images

for f in filters:

model.add(Conv2D(f['n_filters'], f['size'], strides=1, padding='VALID', activation="relu"))

model.add(Flatten())

for n in dense_shapes:

model.add(Dense(n))

return model

m = tf_build_model(input_shape, fs, ds)

m.summary()

Registra tu solución en linea

student.submit_task(namespace=globals(), task_id='T3');