LAB 4.2 - Transfer learning


!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);

from local.lib.rlxmoocapi import submit, session

session.LoginSequence(endpoint=init.endpoint, course_id=init.course_id, lab_id="L04.02", varname="student");

BE PATIENT IN THIS LAB, MODELS ARE LARGE AND MAY TAKE A WHILE TO DOWNLOAD, TRAIN, MAKE INFERENCE, and ALSO FOR GRADERS TO EVALUATE YOUR SUBMISSION.

You may want to set the runtime to GPU if in Google Colab

import tensorflow as tf

!pip install --upgrade tensorflow_hub

import tensorflow_hub as hub

import numpy as np

import matplotlib.pyplot as plt

from skimage.io import imread

import h5py

import pandas as pd

%matplotlib inline

TASK 1: TensorFlow Hub classification model

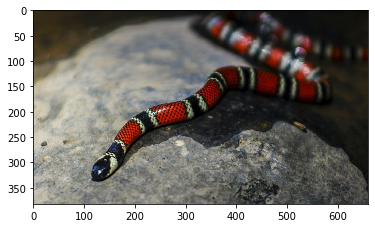

Complete the following function so that it uses the Inception V1 model on TensorFlow Hub to obtain the top three ImageNet labels for a given image, along with their probabilities. Observe that:

- the input `img` is an `np.array` of dims `[w,h,3]`
- the model returns `logits`, which must be transformed into probabilities with a softmax function: \(p_i = \frac{e^{L_i}}{\sum_j e^{L_j}}\), where \(p_i\) is the probability of class \(i\) and \(L_i\) is the logit assigned to that class
- you must resize the input `img` to dimensions `[1,224,224,3]`. Use `skimage.transform.resize` with `anti_aliasing=True`.
- you must ensure the pixel values of `img` are in the `[0,1]` range. Normalize the image pixel values by subtracting the minimum value and dividing by the difference between the maximum and the minimum.
- the logits returned by the model correspond to the labels in this file, as described in the TensorFlow Hub model page. This list is passed to your function in the `labels_list` argument.
- you must return a dictionary with three elements, containing the class labels as keys and the probabilities as values.
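As an illustration of the normalization step described above, here is a minimal sketch on a synthetic image. The helper name `minmax_normalize` and the random test image are assumptions made for this example only; the resize with `skimage.transform.resize` and the model call are intentionally left out.

```python
import numpy as np

def minmax_normalize(img):
    """Linearly rescale pixel values so the minimum maps to 0 and the maximum to 1.

    Hypothetical helper for illustration; assumes the image is not constant
    (max > min), otherwise the division below would be by zero.
    """
    img = img.astype(np.float32)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo)

# synthetic stand-in for an image that was already resized to 224x224
toy_img = np.random.RandomState(0).randint(0, 256, size=(224, 224, 3)).astype(np.uint8)

norm_img = minmax_normalize(toy_img)

# add a leading batch dimension: [224,224,3] -> [1,224,224,3]
batch = norm_img[np.newaxis, ...]
print(batch.shape, norm_img.min(), norm_img.max())
```

Normalizing after resizing (rather than before) is one possible ordering; since interpolation can slightly change the value range, it guarantees the final array spans exactly `[0,1]`.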

For instance, for the following image you should return this dictionary:

{'king snake': 0.8190326,

'ringneck snake': 0.026169779,

'sea snake': 0.030096276}
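The softmax formula and the top-three selection can be tried out on made-up numbers before touching the real model. The sketch below is only an illustration: the labels and logits are invented, and a real model typically returns logits with a leading batch dimension, which would need to be indexed away first.

```python
import numpy as np

def softmax(logits):
    """p_i = exp(L_i) / sum_j exp(L_j).

    Subtracting the maximum logit first does not change the result
    mathematically, but avoids overflow in exp for large logits.
    """
    shifted = logits - logits.max()
    exps = np.exp(shifted)
    return exps / exps.sum()

# invented labels and logits, for illustration only
toy_labels = ["cat", "dog", "fish", "bird", "frog"]
toy_logits = np.array([1.0, 3.0, 0.5, 2.0, -1.0])

probs = softmax(toy_logits)

# indices of the three largest probabilities, highest first
top3 = np.argsort(probs)[::-1][:3]

result = {toy_labels[i]: float(probs[i]) for i in top3}
print(result)
```

Because softmax is monotonic, the three largest logits and the three largest probabilities always belong to the same classes.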

snake_img = imread('local/imgs/snake.jpg')

plt.imshow(snake_img)

<matplotlib.image.AxesImage at 0x7f33e28aff10>

with open("local/data/ImageNetLabels.txt", "r") as f:
    labels_list = [i.rstrip() for i in f.readlines()]

def get_top3_inceptionv1_labels(img, labels_list):
    from skimage.transform import resize

    m = ...      # load the tensorflow hub model
    rimg = ...   # resize and reshape the image to [1,224,224,3]
    rimg = ...   # normalize the image to a [0,1] range
    logits = ... # feed the image into the model to obtain the logits
    probs = ...  # convert logits to probabilities
    r = ...      # get the top 3 labels according to the probabilities into a dict

    return r

check your code with the image above

get_top3_inceptionv1_labels(snake_img, labels_list)

check your code with any image available on the internet

url_chimp = 'https://scx2.b-cdn.net/gfx/news/2019/canwereallyk.jpg'

!wget -q -O img.jpg $url_chimp

img = imread('img.jpg')

plt.imshow(img); plt.axis("off")

get_top3_inceptionv1_labels(img, labels_list)

{'chimpanzee': 0.96279883,

'hippopotamus': 0.0016488637,

'gorilla': 0.0013446786}

Submit your solution online

student.submit_task(namespace=globals(), task_id='T1');

TASK 2: TensorFlow Hub feature extraction model

Complete the following function so that, given a set of images, it returns the feature vector extracted for each image with the Inception V1 TensorFlow Hub model.

Observe that:

- TensorFlow Hub publishes different models for classification and for feature extraction
- `imgs` can be any array of shape `[n_images, w, h, n_channels]`
- the output must be of shape `[n_images, 1024]`
- you must return a `numpy` array
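The shape contract above (one feature row per input image, returned as a `numpy` array) can be illustrated without any real model. In the sketch below, `extract_in_batches` and `fake_extractor` are hypothetical names, and the stand-in extractor simply averages each channel, so it produces 3 features per image rather than 1024. Processing in batches is an assumption made here to keep memory use bounded; it is not required by the task.

```python
import numpy as np

def extract_in_batches(imgs, extract_fn, batch_size=256):
    """Apply extract_fn to consecutive slices of imgs and stack the results.

    extract_fn is assumed to map an array of shape [n, w, h, c] to something
    convertible to an array of shape [n, n_features].
    """
    chunks = []
    for start in range(0, len(imgs), batch_size):
        batch = imgs[start:start + batch_size]
        chunks.append(np.asarray(extract_fn(batch)))
    return np.concatenate(chunks, axis=0)

def fake_extractor(batch):
    # stand-in for a real feature extractor: per-channel mean of each image
    return batch.mean(axis=(1, 2))

toy_imgs = np.random.rand(10, 32, 32, 3).astype(np.float32)
toy_features = extract_in_batches(toy_imgs, fake_extractor, batch_size=4)
print(type(toy_features), toy_features.shape)
```

With a real extractor in place of `fake_extractor`, the `np.asarray` call is what would turn framework tensors into a plain `numpy` array.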

!wget -nc -q https://s3.amazonaws.com/rlx/mini_cifar.h5

with h5py.File('mini_cifar.h5','r') as h5f:
    x_cifar = h5f["x"][:][:1000]
    y_cifar = h5f["y"][:][:1000]

x_cifar.shape, y_cifar.shape

((1000, 32, 32, 3), (1000,))

def feature_vector_inceptionv1(imgs):
    # YOUR CODE HERE
    features = ...

    return features

observe we can extract the features of our mini cifar dataset

xf_cifar = feature_vector_inceptionv1(x_cifar)

xf_cifar.shape

check your code, this sum must be close to:

array([186.98825, 436.80975, 237.31288, 353.58902, 492.95978, 307.38007,

234.02158, 260.64508, 186.74268, 265.00177], dtype=float32)

xf_cifar.sum(axis=1)[:10]

array([186.9881 , 436.80994, 237.3129 , 353.58914, 492.95966, 307.38 ,

234.02158, 260.645 , 186.74266, 265.0017 ], dtype=float32)

observe we can now do many things with the features, such as:

- compute similarity between images in this feature space
- use any classical machine learning method
- etc.

i,j = np.random.randint(len(xf_cifar), size=2)

imgA, imgB = x_cifar[i], x_cifar[j]

plt.figure(figsize=(4,2))

plt.subplot(121); plt.imshow(imgA); plt.axis("off")

plt.subplot(122); plt.imshow(imgB); plt.axis("off");

print ("similarity in feature space", np.linalg.norm(xf_cifar[i]-xf_cifar[j]))

similarity in feature space 41.228073
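Note that the value printed above is a Euclidean distance, so smaller values mean more similar images. A cosine similarity is a common alternative in which larger values mean more similar. The sketch below compares both on random vectors of length 1024; the helper names and the random data are assumptions for illustration and do not come from the lab.

```python
import numpy as np

def euclidean_distance(a, b):
    """Length of the difference vector: 0 for identical vectors, grows with dissimilarity."""
    return float(np.linalg.norm(a - b))

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 for vectors pointing the same way."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# random stand-ins for two feature vectors
rng = np.random.RandomState(0)
fa = rng.rand(1024).astype(np.float32)
fb = rng.rand(1024).astype(np.float32)

print("distance  ", euclidean_distance(fa, fb))
print("similarity", cosine_similarity(fa, fb))
```

Cosine similarity ignores the overall magnitude of the vectors, which can matter when feature norms vary a lot between images.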

from sklearn.model_selection import cross_val_score

from sklearn.svm import SVC

estimator = SVC(gamma=.001)

cross_val_score(estimator, xf_cifar, y_cifar)

array([0.755, 0.73 , 0.775, 0.765, 0.73 ])

Submit your solution online

student.submit_task(namespace=globals(), task_id='T2');

TASK 3: Model for fine tuning

Complete the following function so that it builds a model with three layers:

- one `hub.KerasLayer` with https://tfhub.dev/tensorflow/efficientnet/b7/feature-vector/1 feature vector extraction and `trainable` according to the function parameter.
- one `Dense` layer with `dense_size` neurons and `relu` activation
- one `Dense` layer as output with 3 neurons (one for each class) and `softmax` activation

def create_model(trainable=False, dense_size=100):
    num_classes = 3

    m = tf.keras.Sequential([
        ... # YOUR CODE HERE
    ])

    return m

With `dense_size=100` and `input_shape=[None, 32, 32, 3]` your model should have a total of 64,354,083 parameters, of which only 256,403 are trainable if `trainable=False`, and 64,043,363 if `trainable=True`.

m = create_model(trainable=True)

m.build(input_shape=[None, 32, 32, 3])

m.summary()

Model: "sequential_4"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
keras_layer_4 (KerasLayer)   (None, 2560)              64097680
_________________________________________________________________
dense (Dense)                (None, 100)               256100
_________________________________________________________________
dense_1 (Dense)              (None, 3)                 303
=================================================================
Total params: 64,354,083
Trainable params: 64,043,363
Non-trainable params: 310,720
_________________________________________________________________
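The parameter counts in the summary above can be cross-checked by hand: a `Dense` layer has one weight per input-output pair plus one bias per output. The short sketch below redoes that arithmetic. The feature size (2560), the backbone count (64,097,680) and the non-trainable count (310,720) are copied from the summary; `dense_params` is a hypothetical helper written for this example.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus biases (n_units) of a fully connected layer."""
    return n_inputs * n_units + n_units

feature_dim = 2560             # output size of the hub layer, from the summary
backbone_params = 64_097_680   # params of the hub layer, from the summary
non_trainable = 310_720        # non-trainable params with trainable=True, from the summary

hidden = dense_params(feature_dim, 100)  # first Dense layer, dense_size=100
output = dense_params(100, 3)            # output Dense layer, 3 classes
head = hidden + output                   # all that is trained when trainable=False
total = backbone_params + head

print(hidden, output, head, total, total - non_trainable)
```

The head alone accounts for the 256,403 trainable parameters of the frozen configuration, which is why training with `trainable=False` is much lighter than fine tuning the whole network.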

you can now train your model. If you set trainable=True you should get >80% accuracy in validation with 10-30 epochs

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x_cifar, y_cifar, test_size=.2)

print(x_train.shape, y_train.shape, x_test.shape, y_test.shape)

(800, 32, 32, 3) (800,) (200, 32, 32, 3) (200,)

m.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

m.fit(x_train, y_train, epochs=10,batch_size=16, validation_data=(x_test, y_test))

Submit your solution online

student.submit_task(namespace=globals(), task_id='T3');

----- grader message -------
testing your code with sample imgs
correct
----------------------------