5.5 Sequences generation


!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);

import sys

if 'google.colab' in sys.modules:
    print("setting tensorflow version in colab")
    %tensorflow_version 2.x

%load_ext tensorboard

import tensorflow as tf

tf.__version__

Sampling

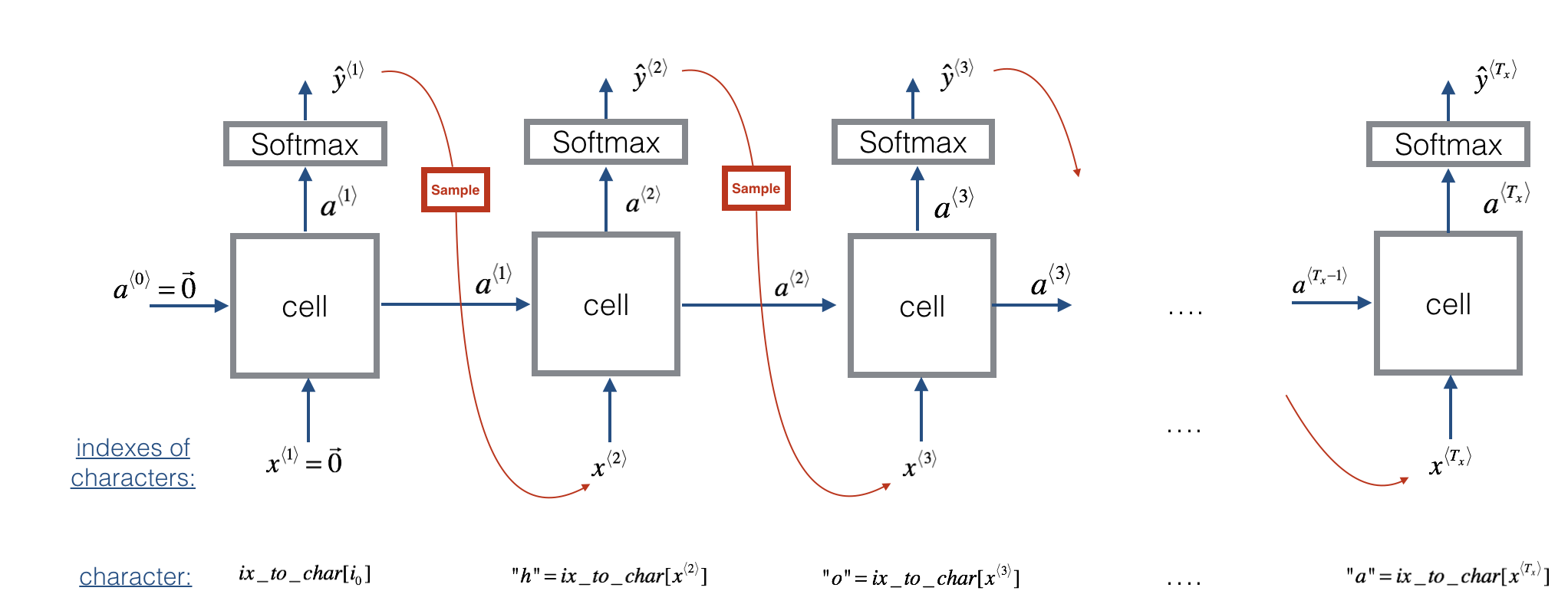

Once trained, a recurrent neural network (RNN) can be used as a generative model. This is now common practice, both to study how well a model has learned a problem and to learn more about the problem domain itself. The same approach is used, for example, for music generation and composition.

The process of generation is explained in the picture below:

from IPython.display import Image

Image(filename='local/imgs/dinos3.png', width=800)

#<img src="local/imgs/dinos3.png" style="width:500;height:300px;">
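The generation loop in the figure can be sketched with a toy stand-in for the trained network. In this minimal sketch, the table `next_char_probs`, the three-letter vocabulary and the function `generate` are all hypothetical and only illustrate the feedback mechanism: each sampled character becomes the input of the next step.

```python
import numpy as np

# Toy stand-in for a trained network (assumption, not the notebook's model):
# a fixed table of next-character probabilities.
# Rows: current character, columns: next character.
vocab = ["a", "b", "c"]
next_char_probs = np.array([
    [0.1, 0.8, 0.1],   # after "a"
    [0.1, 0.1, 0.8],   # after "b"
    [0.8, 0.1, 0.1],   # after "c"
])

def generate(seed_index, n_steps, rng):
    """Sample n_steps characters, feeding each output back as the next input."""
    indices = [seed_index]
    for _ in range(n_steps):
        probs = next_char_probs[indices[-1]]    # "model" output for the last character
        nxt = rng.choice(len(vocab), p=probs)   # sample the next character
        indices.append(int(nxt))                # the sample becomes the next input
    return "".join(vocab[i] for i in indices)

rng = np.random.default_rng(0)
text = generate(seed_index=0, n_steps=20, rng=rng)
print(text)
```

With a real RNN the lookup in `next_char_probs` would be replaced by a forward pass of the network over the current input window.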

Let’s do an example:

import sys

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Masking

from tensorflow.keras.layers import Dropout

from tensorflow.keras.layers import LSTM

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.callbacks import ModelCheckpoint

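# keras.utils.np_utils is not available in recent Keras releases;
# this alias keeps the np_utils.to_categorical calls below working
from tensorflow.keras import utils as np_utils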

#This code is to fix a compatibility problem of TF 2.4 with some GPUs

from tensorflow.compat.v1 import ConfigProto

from tensorflow.compat.v1 import InteractiveSession

config = ConfigProto()

config.gpu_options.allow_growth = True

session = InteractiveSession(config=config)

import nltk

nltk.download('gutenberg')

[nltk_data] Downloading package gutenberg to /home/julian/nltk_data...

[nltk_data] Package gutenberg is already up-to-date!

True

# load the ASCII text (case is preserved, so the vocabulary has upper- and lowercase letters)

raw_text = nltk.corpus.gutenberg.raw('bible-kjv.txt')

raw_text[100:1000]

'Genesis\n\n\n1:1 In the beginning God created the heaven and the earth.\n\n1:2 And the earth was without form, and void; and darkness was upon\nthe face of the deep. And the Spirit of God moved upon the face of the\nwaters.\n\n1:3 And God said, Let there be light: and there was light.\n\n1:4 And God saw the light, that it was good: and God divided the light\nfrom the darkness.\n\n1:5 And God called the light Day, and the darkness he called Night.\nAnd the evening and the morning were the first day.\n\n1:6 And God said, Let there be a firmament in the midst of the waters,\nand let it divide the waters from the waters.\n\n1:7 And God made the firmament, and divided the waters which were\nunder the firmament from the waters which were above the firmament:\nand it was so.\n\n1:8 And God called the firmament Heaven. And the evening and the\nmorning were the second day.\n\n1:9 And God said, Let the waters under the heav'

# create mapping of unique chars to integers

chars = sorted(list(set(raw_text)))

char_to_int = dict((c, i) for i, c in enumerate(chars))

char_to_int

{'\n': 0,

' ': 1,

'!': 2,

"'": 3,

'(': 4,

')': 5,

',': 6,

'-': 7,

'.': 8,

'0': 9,

'1': 10,

'2': 11,

'3': 12,

'4': 13,

'5': 14,

'6': 15,

'7': 16,

'8': 17,

'9': 18,

':': 19,

';': 20,

'?': 21,

'A': 22,

'B': 23,

'C': 24,

'D': 25,

'E': 26,

'F': 27,

'G': 28,

'H': 29,

'I': 30,

'J': 31,

'K': 32,

'L': 33,

'M': 34,

'N': 35,

'O': 36,

'P': 37,

'Q': 38,

'R': 39,

'S': 40,

'T': 41,

'U': 42,

'V': 43,

'W': 44,

'Y': 45,

'Z': 46,

'[': 47,

']': 48,

'a': 49,

'b': 50,

'c': 51,

'd': 52,

'e': 53,

'f': 54,

'g': 55,

'h': 56,

'i': 57,

'j': 58,

'k': 59,

'l': 60,

'm': 61,

'n': 62,

'o': 63,

'p': 64,

'q': 65,

'r': 66,

's': 67,

't': 68,

'u': 69,

'v': 70,

'w': 71,

'x': 72,

'y': 73,

'z': 74}
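As a small illustration of how this mapping is used, the sketch below builds the same kind of dictionary for a short sample string (chosen here only for the example) and checks that encoding followed by decoding returns the original text.

```python
# Short sample text, used only for illustration
text = "And God said"

# Same construction as in the notebook: sorted unique characters -> integers
chars = sorted(set(text))
char_to_int = {c: i for i, c in enumerate(chars)}
int_to_char = {i: c for c, i in char_to_int.items()}

# Encode the text as integers and decode it back
encoded = [char_to_int[c] for c in text]
decoded = "".join(int_to_char[i] for i in encoded)

print(encoded)
print(decoded)
```

Because the characters are sorted before numbering, the space and punctuation come first, then the uppercase letters, then the lowercase ones, which is the order seen in the dictionary above.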

n_chars = len(raw_text)

n_vocab = len(chars)

print("Total Characters: ", n_chars)

print("Total Vocab: ", n_vocab)

Total Characters: 4332554

Total Vocab: 75

To train the model we use sequences of 60 characters. Because the full data set is too large, we use only the first 200,000 characters of the text and take one sequence every 3 characters.

# prepare the dataset of input to output pairs encoded as integers

seq_length = 60

dataX = []

dataY = []

n_chars = 200000

for i in range(0, n_chars - seq_length, 3):
    seq_in = raw_text[i:i + seq_length]
    seq_out = raw_text[i + seq_length]
    dataX.append([char_to_int[char] for char in seq_in])
    dataY.append(char_to_int[seq_out])

n_patterns = len(dataX)

print("Total Patterns: ", n_patterns)

Total Patterns: 66647
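The windowing above can be checked on a tiny string. In this sketch, `make_windows` is a hypothetical helper that mirrors the loop in the notebook; the last line reproduces the pattern count reported for 200,000 characters, windows of 60 and a step of 3.

```python
def make_windows(text, seq_length, step):
    """Return (input window, next character) pairs taken every `step` characters."""
    inputs, targets = [], []
    for i in range(0, len(text) - seq_length, step):
        inputs.append(text[i:i + seq_length])   # seq_length characters of context
        targets.append(text[i + seq_length])    # the character that follows them
    return inputs, targets

# Toy example: windows of 4 characters, one every 3 characters
inputs, targets = make_windows("abcdefghij", seq_length=4, step=3)
print(list(zip(inputs, targets)))

# Number of start positions for the notebook's settings
n_patterns = len(range(0, 200000 - 60, 3))
print(n_patterns)
```

A step of 3 reduces the number of training patterns to roughly a third of what a step of 1 would give, at the cost of skipping some contexts.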

# reshape X to be [samples, time steps, features]

X = np.reshape(dataX, (n_patterns, seq_length, 1))

# normalize

X = X / float(n_vocab)

# one hot encode the output variable

y = np_utils.to_categorical(dataY)

int_to_char = dict((i, c) for i, c in enumerate(chars))
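The shapes produced by the reshape, normalization and one-hot steps are easier to see on toy data. The sketch below uses a made-up vocabulary of 5 symbols and writes the one-hot encoding with NumPy's `np.eye` instead of the Keras helper, so it runs without TensorFlow.

```python
import numpy as np

n_vocab = 5                      # toy vocabulary size (the notebook's is 75)
dataX = [[0, 1, 2], [1, 2, 3]]   # two toy windows of length 3
dataY = [3, 4]                   # next character for each window

# [samples, time steps, features], scaled to the range [0, 1)
X = np.reshape(dataX, (len(dataX), 3, 1)) / float(n_vocab)

# One-hot targets: row i has a single 1 in column dataY[i]
y = np.eye(n_vocab)[dataY]

print(X.shape, y.shape)
```

Indexing `np.eye(n_vocab)` fixes the number of columns to the vocabulary size. With `to_categorical`, the same guarantee can be obtained by passing `num_classes` explicitly; otherwise the width is inferred from the largest label present in the data.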

model = Sequential()

model.add(LSTM(256, input_shape=(X.shape[1], X.shape[2])))

model.add(Dense(y.shape[1], activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam')

X.shape

(66647, 60, 1)

Note that the entire prepared dataset is used for training; no validation split is held out.

model.fit(X, y, epochs=200, batch_size=128, verbose=1)

Epoch 1/200

521/521 [==============================] - 6s 8ms/step - loss: 3.2294

Epoch 2/200

521/521 [==============================] - 3s 5ms/step - loss: 2.8156

Epoch 3/200

521/521 [==============================] - 3s 7ms/step - loss: 2.6550

Epoch 4/200

521/521 [==============================] - 3s 6ms/step - loss: 2.5924

Epoch 5/200

521/521 [==============================] - 5s 9ms/step - loss: 2.5289

Epoch 6/200

521/521 [==============================] - 4s 8ms/step - loss: 2.4810

Epoch 7/200

521/521 [==============================] - 3s 7ms/step - loss: 2.4409

Epoch 8/200

521/521 [==============================] - 5s 10ms/step - loss: 2.4070

Epoch 9/200

521/521 [==============================] - 4s 8ms/step - loss: 2.3840

Epoch 10/200

521/521 [==============================] - 5s 9ms/step - loss: 2.3453

Epoch 11/200

521/521 [==============================] - 5s 9ms/step - loss: 2.3121

Epoch 12/200

521/521 [==============================] - 5s 9ms/step - loss: 2.2972

Epoch 13/200

521/521 [==============================] - 5s 9ms/step - loss: 2.2715

Epoch 14/200

521/521 [==============================] - 4s 8ms/step - loss: 2.2433

Epoch 15/200

521/521 [==============================] - 5s 9ms/step - loss: 2.2105

Epoch 16/200

521/521 [==============================] - 4s 8ms/step - loss: 2.2033

Epoch 17/200

521/521 [==============================] - 3s 6ms/step - loss: 2.1662

Epoch 18/200

521/521 [==============================] - 4s 8ms/step - loss: 2.1614

Epoch 19/200

521/521 [==============================] - 4s 8ms/step - loss: 2.1302

Epoch 20/200

521/521 [==============================] - 4s 8ms/step - loss: 2.1122

Epoch 21/200

521/521 [==============================] - 5s 9ms/step - loss: 2.0818

Epoch 22/200

521/521 [==============================] - 5s 9ms/step - loss: 2.0597

Epoch 23/200

521/521 [==============================] - 4s 8ms/step - loss: 2.0347

Epoch 24/200

521/521 [==============================] - 5s 10ms/step - loss: 2.0123

Epoch 25/200

521/521 [==============================] - 6s 11ms/step - loss: 2.0003

Epoch 26/200

521/521 [==============================] - 4s 8ms/step - loss: 1.9707

Epoch 27/200

521/521 [==============================] - 4s 7ms/step - loss: 1.9547

Epoch 28/200

521/521 [==============================] - 4s 7ms/step - loss: 1.9284

Epoch 29/200

521/521 [==============================] - 4s 8ms/step - loss: 1.9163

Epoch 30/200

521/521 [==============================] - 3s 5ms/step - loss: 1.8940

Epoch 31/200

521/521 [==============================] - 3s 6ms/step - loss: 1.8689

Epoch 32/200

521/521 [==============================] - 3s 5ms/step - loss: 1.8399

Epoch 33/200

521/521 [==============================] - 3s 6ms/step - loss: 1.8204

Epoch 34/200

521/521 [==============================] - 4s 7ms/step - loss: 1.8123

Epoch 35/200

521/521 [==============================] - 3s 6ms/step - loss: 1.7942

Epoch 36/200

521/521 [==============================] - 3s 6ms/step - loss: 1.7545

Epoch 37/200

521/521 [==============================] - 3s 6ms/step - loss: 1.7423

Epoch 38/200

521/521 [==============================] - 3s 6ms/step - loss: 1.7241

Epoch 39/200

521/521 [==============================] - 3s 5ms/step - loss: 1.7037

Epoch 40/200

521/521 [==============================] - 3s 6ms/step - loss: 1.6715

Epoch 41/200

521/521 [==============================] - 3s 6ms/step - loss: 1.6630

Epoch 42/200

521/521 [==============================] - 3s 5ms/step - loss: 1.6391

Epoch 43/200

521/521 [==============================] - 3s 6ms/step - loss: 1.6251

Epoch 44/200

521/521 [==============================] - 3s 5ms/step - loss: 1.5998

Epoch 45/200

521/521 [==============================] - 3s 5ms/step - loss: 1.5725

Epoch 46/200

521/521 [==============================] - 3s 7ms/step - loss: 1.5625

Epoch 47/200

521/521 [==============================] - 4s 7ms/step - loss: 1.5433

Epoch 48/200

521/521 [==============================] - 4s 8ms/step - loss: 1.5173

Epoch 49/200

521/521 [==============================] - 3s 6ms/step - loss: 1.5175

Epoch 50/200

521/521 [==============================] - 4s 7ms/step - loss: 1.4908

Epoch 51/200

521/521 [==============================] - 3s 6ms/step - loss: 1.4646

Epoch 52/200

521/521 [==============================] - 3s 5ms/step - loss: 1.4495

Epoch 53/200

521/521 [==============================] - 3s 6ms/step - loss: 1.4396

Epoch 54/200

521/521 [==============================] - 3s 7ms/step - loss: 1.4203

Epoch 55/200

521/521 [==============================] - 3s 6ms/step - loss: 1.4160

Epoch 56/200

521/521 [==============================] - 3s 6ms/step - loss: 1.3794

Epoch 57/200

521/521 [==============================] - 4s 7ms/step - loss: 1.3661

Epoch 58/200

521/521 [==============================] - 4s 7ms/step - loss: 1.3628

Epoch 59/200

521/521 [==============================] - 5s 9ms/step - loss: 1.3514

Epoch 60/200

521/521 [==============================] - 4s 7ms/step - loss: 1.3292

Epoch 61/200

521/521 [==============================] - 4s 7ms/step - loss: 1.3190

Epoch 62/200

521/521 [==============================] - 4s 7ms/step - loss: 1.3168

Epoch 63/200

521/521 [==============================] - 5s 9ms/step - loss: 1.2908

Epoch 64/200

521/521 [==============================] - 4s 7ms/step - loss: 1.2733

Epoch 65/200

521/521 [==============================] - 4s 8ms/step - loss: 1.2741

Epoch 66/200

521/521 [==============================] - 4s 8ms/step - loss: 1.2581

Epoch 67/200

521/521 [==============================] - 3s 6ms/step - loss: 1.2615

Epoch 68/200

521/521 [==============================] - 3s 6ms/step - loss: 1.3300

Epoch 69/200

521/521 [==============================] - 3s 5ms/step - loss: 1.3073

Epoch 70/200

521/521 [==============================] - 3s 5ms/step - loss: 1.2368

Epoch 71/200

521/521 [==============================] - 3s 5ms/step - loss: 1.2135

Epoch 72/200

521/521 [==============================] - 4s 8ms/step - loss: 1.1927

Epoch 73/200

521/521 [==============================] - 4s 8ms/step - loss: 1.1833

Epoch 74/200

521/521 [==============================] - 4s 8ms/step - loss: 1.1753

Epoch 75/200

521/521 [==============================] - 4s 8ms/step - loss: 1.1791

Epoch 76/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1697

Epoch 77/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1770

Epoch 78/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1577

Epoch 79/200

521/521 [==============================] - 4s 7ms/step - loss: 1.1238

Epoch 80/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1283

Epoch 81/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1239

Epoch 82/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1071

Epoch 83/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1245

Epoch 84/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0958

Epoch 85/200

521/521 [==============================] - 3s 6ms/step - loss: 1.0738

Epoch 86/200

521/521 [==============================] - 3s 6ms/step - loss: 1.0657

Epoch 87/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0716

Epoch 88/200

521/521 [==============================] - 4s 8ms/step - loss: 1.0861

Epoch 89/200

521/521 [==============================] - 3s 6ms/step - loss: 1.0640

Epoch 90/200

521/521 [==============================] - 3s 6ms/step - loss: 1.0391

Epoch 91/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0259

Epoch 92/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0702

Epoch 93/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0005

Epoch 94/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0025

Epoch 95/200

521/521 [==============================] - 4s 7ms/step - loss: 0.9959

Epoch 96/200

521/521 [==============================] - 3s 5ms/step - loss: 0.9941

Epoch 97/200

521/521 [==============================] - 4s 7ms/step - loss: 0.9868

Epoch 98/200

521/521 [==============================] - 4s 7ms/step - loss: 1.0211

Epoch 99/200

521/521 [==============================] - 4s 7ms/step - loss: 0.9525

Epoch 100/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9613

Epoch 101/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9612

Epoch 102/200

521/521 [==============================] - 3s 6ms/step - loss: 0.9432

Epoch 103/200

521/521 [==============================] - 4s 8ms/step - loss: 0.9590

Epoch 104/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9434

Epoch 105/200

521/521 [==============================] - 4s 8ms/step - loss: 0.9466

Epoch 106/200

521/521 [==============================] - 4s 8ms/step - loss: 0.9258

Epoch 107/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9225

Epoch 108/200

521/521 [==============================] - 4s 8ms/step - loss: 0.9239

Epoch 109/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9067

Epoch 110/200

521/521 [==============================] - 5s 11ms/step - loss: 0.8950

Epoch 111/200

521/521 [==============================] - 5s 9ms/step - loss: 0.9142

Epoch 112/200

521/521 [==============================] - 5s 10ms/step - loss: 0.8786

Epoch 113/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8703

Epoch 114/200

521/521 [==============================] - 5s 10ms/step - loss: 0.8730

Epoch 115/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8649

Epoch 116/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8649

Epoch 117/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8481

Epoch 118/200

521/521 [==============================] - 3s 6ms/step - loss: 0.8408

Epoch 119/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8518

Epoch 120/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8585

Epoch 121/200

521/521 [==============================] - 4s 7ms/step - loss: 0.8506

Epoch 122/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8088

Epoch 123/200

521/521 [==============================] - 5s 10ms/step - loss: 0.8321

Epoch 124/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8818

Epoch 125/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8057

Epoch 126/200

521/521 [==============================] - 5s 10ms/step - loss: 0.8122

Epoch 127/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8208

Epoch 128/200

521/521 [==============================] - 5s 9ms/step - loss: 0.7973

Epoch 129/200

521/521 [==============================] - 5s 9ms/step - loss: 0.7867

Epoch 130/200

521/521 [==============================] - 3s 6ms/step - loss: 0.8401

Epoch 131/200

521/521 [==============================] - 4s 7ms/step - loss: 0.8098

Epoch 132/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7664

Epoch 133/200

521/521 [==============================] - 3s 7ms/step - loss: 0.7790

Epoch 134/200

521/521 [==============================] - 3s 7ms/step - loss: 0.7787

Epoch 135/200

521/521 [==============================] - 6s 11ms/step - loss: 0.7645

Epoch 136/200

521/521 [==============================] - 4s 8ms/step - loss: 0.8066

Epoch 137/200

521/521 [==============================] - 5s 9ms/step - loss: 0.8463

Epoch 138/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7783

Epoch 139/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7693

Epoch 140/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7327

Epoch 141/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7230

Epoch 142/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7498

Epoch 143/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7597

Epoch 144/200

521/521 [==============================] - 4s 8ms/step - loss: 0.7823

Epoch 145/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7268

Epoch 146/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7482

Epoch 147/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7101

Epoch 148/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7199

Epoch 149/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7128

Epoch 150/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7161

Epoch 151/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7046

Epoch 152/200

521/521 [==============================] - 4s 8ms/step - loss: 0.6901

Epoch 153/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7059

Epoch 154/200

521/521 [==============================] - 3s 5ms/step - loss: 0.6859

Epoch 155/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7286

Epoch 156/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7667

Epoch 157/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6656

Epoch 158/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6651

Epoch 159/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6823

Epoch 160/200

521/521 [==============================] - 3s 6ms/step - loss: 1.1258

Epoch 161/200

521/521 [==============================] - 3s 5ms/step - loss: 0.6587

Epoch 162/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6688

Epoch 163/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6616

Epoch 164/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6522

Epoch 165/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6574

Epoch 166/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6765

Epoch 167/200

521/521 [==============================] - 3s 5ms/step - loss: 0.6188

Epoch 168/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6417

Epoch 169/200

521/521 [==============================] - 4s 8ms/step - loss: 0.6775

Epoch 170/200

521/521 [==============================] - 4s 7ms/step - loss: 0.7435

Epoch 171/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6036

Epoch 172/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6266

Epoch 173/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6163

Epoch 174/200

521/521 [==============================] - 3s 5ms/step - loss: 0.6069

Epoch 175/200

521/521 [==============================] - 4s 8ms/step - loss: 0.6732

Epoch 176/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6543

Epoch 177/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6134

Epoch 178/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5893

Epoch 179/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6064

Epoch 180/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6139

Epoch 181/200

521/521 [==============================] - 3s 6ms/step - loss: 0.7111

Epoch 182/200

521/521 [==============================] - 3s 7ms/step - loss: 0.6698

Epoch 183/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6161

Epoch 184/200

521/521 [==============================] - 3s 6ms/step - loss: 0.5821

Epoch 185/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5722

Epoch 186/200

521/521 [==============================] - 4s 7ms/step - loss: 0.5964

Epoch 187/200

521/521 [==============================] - 3s 5ms/step - loss: 0.5999

Epoch 188/200

521/521 [==============================] - 3s 6ms/step - loss: 0.6639

Epoch 189/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5951

Epoch 190/200

521/521 [==============================] - 4s 7ms/step - loss: 0.6253

Epoch 191/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5794

Epoch 192/200

521/521 [==============================] - 3s 6ms/step - loss: 0.5785

Epoch 193/200

521/521 [==============================] - 3s 5ms/step - loss: 0.5747

Epoch 194/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5823

Epoch 195/200

521/521 [==============================] - 3s 7ms/step - loss: 0.5734

Epoch 196/200

521/521 [==============================] - 4s 9ms/step - loss: 0.5941

Epoch 197/200

521/521 [==============================] - 4s 7ms/step - loss: 0.8470

Epoch 198/200

521/521 [==============================] - 4s 8ms/step - loss: 0.5750

Epoch 199/200

521/521 [==============================] - 4s 7ms/step - loss: 0.5531

Epoch 200/200

521/521 [==============================] - 4s 7ms/step - loss: 0.5230

<tensorflow.python.keras.callbacks.History at 0x7fc1405288d0>

int_to_char = dict((i, c) for i, c in enumerate(chars))
# pick a random seed
start = np.random.randint(0, len(dataX)-1)
pattern = list(dataX[start])  # copy, so that dataX is not modified by the append below
print("Seed:")
print("\"", ''.join([int_to_char[value] for value in pattern]), "\"")
# generate characters
for i in range(1000):
    x = np.reshape(pattern, (1, len(pattern), 1))
    x = x / float(n_vocab)
    prediction = model.predict(x, verbose=0)
    # greedy decoding: always take the most probable character
    index = np.argmax(prediction)
    result = int_to_char[index]
    sys.stdout.write(result)
    # slide the window: append the new character and drop the oldest one
    pattern.append(index)
    pattern = pattern[1:len(pattern)]
print("\nDone.")

Seed:

" s, saying, Jacob hath taken

away all that was our father's; "

and he hoeeml in uher ph thi liude tf mrte iv mes.hrst.

20:3 And the senea tayt,ov Suonpha, whei sho ln hed teme upene thkt the gare of thed,

6::7 Tnenef tha woin oa Sebha Icble tee Bumpae,ii Noarcrhr,

26:2 And Eoruel dngu, and Hamaa' and Jaiae, and Jahlap, and Jedmah, and Tekah,

and Tekaha, and Teblhc: 36:1 And AnzaNa aid Bihi tii teie shecn ia haa mam, and toet hem: ant there Ara cadh hamk aw uhr fnadmt'onth mi and seee in pas end hete it

unto mh.

and siat kere hete tham

20:61 Nnt mhe elies wf Loau,w gifser Gamoh ae shen N bayerees tvto the eeyh of my cirthed.

and dare nl uhe

drd and hit iind fo?thor hidst, and he tas teet it wate

tp tef khrhe if mae wo hr deci;s aarhmt.

15:54 And the men woie oht wwon thes hs the coes of Rodn: anr the same grsmd mor finye fir

and hes is c phlel sf dr ters woan

fo hanher; aea uh tyelk ny sinv wha hrued afuer hfm,

32:18 And the men woie on ham tien, sh c mivt ma dadkte to doean thth my, theke wer betsle grmme abseer rnw thy natterts,

tha

Done.

The result is not what we expected, mainly for three reasons:

The model needs to be trained on a larger dataset to better capture the dynamics of the language.

During generation, it is better not to always select the output with maximum probability, but to use the output distribution as the parameters of a multinomial distribution and sample from it. This keeps the model from getting stuck in a loop.

A more flexible model, trained with more data, could get better results.

def sample(preds, temperature=1.0):
    # helper function to sample an index from a probability array
    preds = np.asarray(preds).astype('float64')
    preds = np.log(preds) / temperature
    exp_preds = np.exp(preds)
    preds = exp_preds / np.sum(exp_preds)
    probas = np.random.multinomial(1, preds, 1)
    return np.argmax(probas)
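To see what the `temperature` argument of `sample` does, the rescaling step can be isolated and applied to a small, made-up distribution. In this sketch `apply_temperature` is a hypothetical helper that repeats the log, divide and renormalize steps of `sample` (with the maximum subtracted before exponentiating, a common numerical safeguard that does not change the result), and the probabilities are invented for illustration.

```python
import numpy as np

def apply_temperature(probs, temperature):
    """Rescale a probability vector: T < 1 sharpens it, T > 1 flattens it."""
    logits = np.log(np.asarray(probs, dtype="float64")) / temperature
    exp_logits = np.exp(logits - logits.max())   # subtract max for numerical stability
    return exp_logits / exp_logits.sum()

probs = [0.6, 0.3, 0.1]                  # made-up model output
cold = apply_temperature(probs, 0.5)     # approx. [0.78, 0.20, 0.02]
same = apply_temperature(probs, 1.0)     # unchanged
hot = apply_temperature(probs, 2.0)      # approx. [0.47, 0.33, 0.19]

# Greedy decoding always returns the same index; sampling does not have to
rng = np.random.default_rng(0)
greedy = [int(np.argmax(probs)) for _ in range(10)]
sampled = [int(rng.choice(len(probs), p=same)) for _ in range(10)]
print(cold, same, hot)
print(greedy, sampled)
```

Low temperatures approach the argmax behaviour used in the generation loop above, while high temperatures approach uniform sampling, so the value trades repetitiveness against noise.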

Using a more complex model with the whole dataset

This problem is computationally demanding, so the next model was run on a GPU.

batch_size = 128

model = Sequential()

model.add(LSTM(256, input_shape=(X.shape[1], X.shape[2]), return_sequences=True))

model.add(Dropout(rate=0.2))

model.add(LSTM(256))

model.add(Dropout(rate=0.2))

model.add(Dense(y.shape[1], activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam')
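The size of this stacked model can be estimated by hand. The sketch below assumes the standard Keras LSTM layout (four gates, each with input weights, recurrent weights and one bias vector) and assumes that `y.shape[1]` equals the vocabulary size of 75; the helper names `lstm_params` and `dense_params` are hypothetical.

```python
def lstm_params(units, input_dim):
    """Weights of one LSTM layer: 4 gates x (input weights + recurrent weights + bias)."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    """Weights of a fully connected layer: weight matrix plus bias."""
    return input_dim * units + units

n_classes = 75  # assumption: one output per character in the vocabulary

first_lstm = lstm_params(256, input_dim=1)       # input is one scalar per time step
second_lstm = lstm_params(256, input_dim=256)    # input is the first layer's output
output = dense_params(n_classes, input_dim=256)
total = first_lstm + second_lstm + output        # Dropout layers add no parameters

print(first_lstm, second_lstm, output, total)
```

Under these assumptions the second LSTM layer holds about two thirds of the parameters, which is consistent with the longer time per step seen when this model is trained.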

More data could produce memory errors, so we create a data generator class for the problem:

class KerasBatchGenerator(object):
    def __init__(self, data, num_steps, batch_size, vocabulary, skip_step=1):
        self.data = data
        self.num_steps = num_steps
        self.batch_size = batch_size
        self.vocabulary = vocabulary
        # this will track the progress of the batches sequentially through the
        # data set - once the data reaches the end of the data set it will reset
        # back to zero
        self.current_idx = 0
        # skip_step is the number of characters skipped between the start of
        # one sequence and the start of the next
        self.skip_step = skip_step

    def generate(self):
        x = np.zeros((self.batch_size, self.num_steps, 1))
        y = np.zeros((self.batch_size, self.vocabulary))
        while True:
            for i in range(self.batch_size):
                if self.current_idx + self.num_steps >= len(self.data):
                    # reset the index back to the start of the data set
                    self.current_idx = 0
                seq_in = self.data[self.current_idx:self.current_idx + self.num_steps]
                x[i, :, 0] = np.array([char_to_int[char] for char in seq_in])/ float(n_vocab)
                seq_out = self.data[self.current_idx + self.num_steps]
                temp_y = char_to_int[seq_out]
                # convert temp_y into a one hot representation
                y[i, :] = np_utils.to_categorical(temp_y, num_classes=self.vocabulary)
                self.current_idx += self.skip_step
            yield x, y
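The batching logic of the class can be tried out without Keras. The following is a simplified sketch, not the class itself: `batch_generator` is a hypothetical function that follows the same indexing and wrap-around rule, builds the one-hot target with NumPy, and takes the mapping as an argument instead of reading global variables. The sample sentence is made up for the example.

```python
import numpy as np

def batch_generator(text, char_to_int, seq_length, batch_size, skip_step=1):
    """Yield (x, y) batches forever, wrapping around at the end of the text."""
    n_vocab = len(char_to_int)
    idx = 0
    while True:
        # fresh arrays per batch, so earlier batches are not overwritten
        x = np.zeros((batch_size, seq_length, 1))
        y = np.zeros((batch_size, n_vocab))
        for i in range(batch_size):
            if idx + seq_length >= len(text):
                idx = 0                                   # restart from the beginning
            window = text[idx:idx + seq_length]
            x[i, :, 0] = [char_to_int[c] / n_vocab for c in window]
            y[i, char_to_int[text[idx + seq_length]]] = 1.0   # one-hot next character
            idx += skip_step
        yield x, y

text = "in the beginning god created the heaven and the earth"
char_to_int = {c: i for i, c in enumerate(sorted(set(text)))}

gen = batch_generator(text, char_to_int, seq_length=10, batch_size=4, skip_step=3)
x_batch, y_batch = next(gen)
print(x_batch.shape, y_batch.shape)
```

One design difference is deliberate: the class above reuses the same `x` and `y` arrays for every batch, whereas this sketch allocates new ones, which is safer if batches are stored or consumed asynchronously.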

batch_size = 128

train_data_generator = KerasBatchGenerator(raw_text, seq_length, batch_size, n_vocab, skip_step=3)

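# Generator-based training over the full text is left disabled here;
# the fit call below reuses X and y from the 200000-character subset.
#model.fit_generator(train_data_generator.generate(), epochs=30, steps_per_epoch=n_chars/batch_size)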

model.fit(X, y, epochs=200, batch_size=32)

Epoch 1/200

2083/2083 [==============================] - 26s 12ms/step - loss: 3.0367

Epoch 2/200

2083/2083 [==============================] - 24s 11ms/step - loss: 2.5037

Epoch 3/200

2083/2083 [==============================] - 25s 12ms/step - loss: 2.3439

Epoch 4/200

2083/2083 [==============================] - 27s 13ms/step - loss: 2.2347

Epoch 5/200

2083/2083 [==============================] - 26s 12ms/step - loss: 2.1483

Epoch 6/200

2083/2083 [==============================] - 24s 11ms/step - loss: 2.0631

Epoch 7/200

2083/2083 [==============================] - 23s 11ms/step - loss: 1.9892

Epoch 8/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.9368

Epoch 9/200

2083/2083 [==============================] - 32s 16ms/step - loss: 1.8735

Epoch 10/200

2083/2083 [==============================] - 28s 13ms/step - loss: 1.8218

Epoch 11/200

2083/2083 [==============================] - 29s 14ms/step - loss: 1.7650

Epoch 12/200

2083/2083 [==============================] - 26s 12ms/step - loss: 1.7209

Epoch 13/200

2083/2083 [==============================] - 34s 16ms/step - loss: 1.6791

Epoch 14/200

2083/2083 [==============================] - 29s 14ms/step - loss: 1.6435

Epoch 15/200

2083/2083 [==============================] - 22s 11ms/step - loss: 1.5907

Epoch 16/200

2083/2083 [==============================] - 28s 14ms/step - loss: 1.5612

Epoch 17/200

2083/2083 [==============================] - 21s 10ms/step - loss: 1.5159

Epoch 18/200

2083/2083 [==============================] - 20s 10ms/step - loss: 1.5025

Epoch 19/200

2083/2083 [==============================] - 20s 10ms/step - loss: 1.4749

Epoch 20/200

2083/2083 [==============================] - 22s 10ms/step - loss: 1.4397

Epoch 21/200

2083/2083 [==============================] - 30s 14ms/step - loss: 1.3992

Epoch 22/200

2083/2083 [==============================] - 23s 11ms/step - loss: 1.3764

Epoch 23/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.3350

Epoch 24/200

2083/2083 [==============================] - 28s 14ms/step - loss: 1.3210

Epoch 25/200

2083/2083 [==============================] - 28s 14ms/step - loss: 1.2972

Epoch 26/200

2083/2083 [==============================] - 28s 14ms/step - loss: 1.2743

Epoch 27/200

2083/2083 [==============================] - 28s 13ms/step - loss: 1.2628

Epoch 28/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.2338

Epoch 29/200

2083/2083 [==============================] - 24s 11ms/step - loss: 1.2126

Epoch 30/200

2083/2083 [==============================] - 29s 14ms/step - loss: 1.1936

Epoch 31/200

2083/2083 [==============================] - 30s 14ms/step - loss: 1.1889

Epoch 32/200

2083/2083 [==============================] - 30s 14ms/step - loss: 1.1614

Epoch 33/200

2083/2083 [==============================] - 29s 14ms/step - loss: 1.1415

Epoch 34/200

2083/2083 [==============================] - 33s 16ms/step - loss: 1.1335

Epoch 35/200

2083/2083 [==============================] - 21s 10ms/step - loss: 1.1311

Epoch 36/200

2083/2083 [==============================] - 22s 11ms/step - loss: 1.1187

Epoch 37/200

2083/2083 [==============================] - 34s 16ms/step - loss: 1.1038

Epoch 38/200

2083/2083 [==============================] - 36s 17ms/step - loss: 1.0968

Epoch 39/200

2083/2083 [==============================] - 26s 13ms/step - loss: 1.0790

Epoch 40/200

2083/2083 [==============================] - 31s 15ms/step - loss: 1.0745

Epoch 41/200

2083/2083 [==============================] - 28s 13ms/step - loss: 1.0655

Epoch 42/200

2083/2083 [==============================] - 21s 10ms/step - loss: 1.0480

Epoch 43/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.0453

Epoch 44/200

2083/2083 [==============================] - 32s 16ms/step - loss: 1.0395

Epoch 45/200

2083/2083 [==============================] - 35s 17ms/step - loss: 1.0238

Epoch 46/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.0261

Epoch 47/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.0142

Epoch 48/200

2083/2083 [==============================] - 22s 11ms/step - loss: 1.0148

Epoch 49/200

2083/2083 [==============================] - 25s 12ms/step - loss: 0.9909

Epoch 50/200

2083/2083 [==============================] - 21s 10ms/step - loss: 0.9929

Epoch 51/200

2083/2083 [==============================] - 28s 13ms/step - loss: 0.9913

Epoch 52/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9861

Epoch 53/200

2083/2083 [==============================] - 35s 17ms/step - loss: 0.9852

Epoch 54/200

2083/2083 [==============================] - 32s 15ms/step - loss: 0.9682

Epoch 55/200

2083/2083 [==============================] - 32s 15ms/step - loss: 0.9794

Epoch 56/200

2083/2083 [==============================] - 36s 17ms/step - loss: 0.9594

Epoch 57/200

2083/2083 [==============================] - 37s 18ms/step - loss: 0.9570

Epoch 58/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9623

Epoch 59/200

2083/2083 [==============================] - 37s 18ms/step - loss: 0.9515

Epoch 60/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9419

Epoch 61/200

2083/2083 [==============================] - 36s 17ms/step - loss: 0.9560

Epoch 62/200

2083/2083 [==============================] - 33s 16ms/step - loss: 0.9503

Epoch 63/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9428

Epoch 64/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9426

Epoch 65/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9448

Epoch 66/200

2083/2083 [==============================] - 26s 13ms/step - loss: 0.9434

Epoch 67/200

2083/2083 [==============================] - 32s 15ms/step - loss: 0.9406

Epoch 68/200

2083/2083 [==============================] - 33s 16ms/step - loss: 0.9244

Epoch 69/200

2083/2083 [==============================] - 35s 17ms/step - loss: 0.9323

Epoch 70/200

2083/2083 [==============================] - 38s 18ms/step - loss: 0.9361

Epoch 71/200

2083/2083 [==============================] - 33s 16ms/step - loss: 0.9252

Epoch 72/200

2083/2083 [==============================] - 36s 17ms/step - loss: 0.9325

Epoch 73/200

2083/2083 [==============================] - 34s 16ms/step - loss: 0.9312

Epoch 74/200

2083/2083 [==============================] - 30s 15ms/step - loss: 0.9343

Epoch 75/200

2083/2083 [==============================] - 33s 16ms/step - loss: 0.9346

Epoch 76/200

2083/2083 [==============================] - 36s 17ms/step - loss: 0.9198

Epoch 77/200

2083/2083 [==============================] - 35s 17ms/step - loss: 0.9331

Epoch 78/200

2083/2083 [==============================] - 34s 16ms/step - loss: 1.7209

Epoch 79/200

2083/2083 [==============================] - 37s 18ms/step - loss: 3.7659

Epoch 80/200

2083/2083 [==============================] - 32s 15ms/step - loss: 3.1665

Epoch 81/200

2083/2083 [==============================] - 36s 17ms/step - loss: 2.8868

Epoch 82/200

2083/2083 [==============================] - 30s 15ms/step - loss: 2.7156

Epoch 83/200

2083/2083 [==============================] - 35s 17ms/step - loss: 2.6041

Epoch 84/200

2083/2083 [==============================] - 37s 18ms/step - loss: 2.5368

Epoch 85/200

2083/2083 [==============================] - 32s 16ms/step - loss: 2.4605

Epoch 86/200

2083/2083 [==============================] - 25s 12ms/step - loss: 2.3947

Epoch 87/200

2083/2083 [==============================] - 31s 15ms/step - loss: 2.3747

Epoch 88/200

2083/2083 [==============================] - 36s 17ms/step - loss: 2.3057

Epoch 89/200

2083/2083 [==============================] - 31s 15ms/step - loss: 2.2391

Epoch 90/200

2083/2083 [==============================] - 34s 16ms/step - loss: 2.1357

Epoch 91/200

2083/2083 [==============================] - 32s 16ms/step - loss: 2.0559

Epoch 92/200

2083/2083 [==============================] - 35s 17ms/step - loss: 1.9769

Epoch 93/200

2083/2083 [==============================] - 29s 14ms/step - loss: 1.9188

Epoch 94/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.8637

Epoch 95/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.7961

Epoch 96/200

2083/2083 [==============================] - 41s 20ms/step - loss: 1.7647

Epoch 97/200

2083/2083 [==============================] - 41s 20ms/step - loss: 1.7186

Epoch 98/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.6773

Epoch 99/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.6338

Epoch 100/200

2083/2083 [==============================] - 36s 17ms/step - loss: 1.6125

Epoch 101/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.5730

Epoch 102/200

2083/2083 [==============================] - 36s 17ms/step - loss: 1.5506

Epoch 103/200

2083/2083 [==============================] - 36s 17ms/step - loss: 1.5189

Epoch 104/200

2083/2083 [==============================] - 40s 19ms/step - loss: 1.5105

Epoch 105/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.4798

Epoch 106/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.4562

Epoch 107/200

2083/2083 [==============================] - 41s 20ms/step - loss: 1.5138

Epoch 108/200

2083/2083 [==============================] - 36s 17ms/step - loss: 1.4241

Epoch 109/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.4028

Epoch 110/200

2083/2083 [==============================] - 41s 20ms/step - loss: 1.3790

Epoch 111/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.3591

Epoch 112/200

2083/2083 [==============================] - 39s 18ms/step - loss: 1.3491

Epoch 113/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.3394

Epoch 114/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.3289

Epoch 115/200

2083/2083 [==============================] - 41s 20ms/step - loss: 1.3135

Epoch 116/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.2875

Epoch 117/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2790

Epoch 118/200

2083/2083 [==============================] - 40s 19ms/step - loss: 1.2759

Epoch 119/200

2083/2083 [==============================] - 32s 15ms/step - loss: 1.2641

Epoch 120/200

2083/2083 [==============================] - 40s 19ms/step - loss: 1.2541

Epoch 121/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2385

Epoch 122/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2549

Epoch 123/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2160

Epoch 124/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.2087

Epoch 125/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2009

Epoch 126/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.1916

Epoch 127/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.1804

Epoch 128/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.1824

Epoch 129/200

2083/2083 [==============================] - 31s 15ms/step - loss: 1.1581

Epoch 130/200

2083/2083 [==============================] - 28s 14ms/step - loss: 1.1485

Epoch 131/200

2083/2083 [==============================] - 34s 16ms/step - loss: 1.1538

Epoch 132/200

2083/2083 [==============================] - 35s 17ms/step - loss: 1.1451

Epoch 133/200

2083/2083 [==============================] - 32s 15ms/step - loss: 1.1317

Epoch 134/200

2083/2083 [==============================] - 31s 15ms/step - loss: 1.2675

Epoch 135/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.5304

Epoch 136/200

2083/2083 [==============================] - 35s 17ms/step - loss: 1.5561

Epoch 137/200

2083/2083 [==============================] - 39s 19ms/step - loss: 1.2676

Epoch 138/200

2083/2083 [==============================] - 33s 16ms/step - loss: 1.1609

Epoch 139/200

2083/2083 [==============================] - 37s 18ms/step - loss: 1.1298

Epoch 140/200

2083/2083 [==============================] - 34s 16ms/step - loss: 1.1255

Epoch 141/200

2083/2083 [==============================] - 33s 16ms/step - loss: 1.1045

Epoch 142/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.1079

Epoch 143/200

2083/2083 [==============================] - 32s 15ms/step - loss: 1.1177

Epoch 144/200

2083/2083 [==============================] - 28s 13ms/step - loss: 1.1079

Epoch 145/200

2083/2083 [==============================] - 32s 15ms/step - loss: 1.1024

Epoch 146/200

2083/2083 [==============================] - 38s 18ms/step - loss: 1.1011

Epoch 147/200

2083/2083 [==============================] - 31s 15ms/step - loss: 1.0886

Epoch 148/200

2083/2083 [==============================] - 26s 13ms/step - loss: 1.0800

Epoch 149/200

2083/2083 [==============================] - 32s 15ms/step - loss: 1.0748

Epoch 150/200

2083/2083 [==============================] - 26s 13ms/step - loss: 1.1929

Epoch 151/200

2083/2083 [==============================] - 22s 11ms/step - loss: 1.1015

Epoch 152/200

2083/2083 [==============================] - 28s 13ms/step - loss: 1.0581

Epoch 153/200

2083/2083 [==============================] - 27s 13ms/step - loss: 1.7733

Epoch 154/200

2083/2083 [==============================] - 22s 11ms/step - loss: 4.7665

Epoch 155/200

2083/2083 [==============================] - 27s 13ms/step - loss: 3.7679

Epoch 156/200

2083/2083 [==============================] - 23s 11ms/step - loss: 3.0387

Epoch 157/200

2083/2083 [==============================] - 24s 12ms/step - loss: 2.7524

Epoch 158/200

2083/2083 [==============================] - 25s 12ms/step - loss: 2.4446

Epoch 159/200

2083/2083 [==============================] - 18s 9ms/step - loss: 1.6476

Epoch 160/200

2083/2083 [==============================] - 25s 12ms/step - loss: 1.3003

Epoch 161/200

2083/2083 [==============================] - 17s 8ms/step - loss: 1.1860

Epoch 162/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.1493

Epoch 163/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.1235

Epoch 164/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.0983

Epoch 165/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.0867

Epoch 166/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.0771

Epoch 167/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.0721

Epoch 168/200

2083/2083 [==============================] - 14s 7ms/step - loss: 1.0495

Epoch 169/200

2083/2083 [==============================] - 22s 11ms/step - loss: 1.0529

Epoch 170/200

2083/2083 [==============================] - 20s 10ms/step - loss: 1.0311

Epoch 171/200

2083/2083 [==============================] - 23s 11ms/step - loss: 1.0332

Epoch 172/200

2083/2083 [==============================] - 21s 10ms/step - loss: 1.0385

Epoch 173/200

2083/2083 [==============================] - 20s 10ms/step - loss: 1.0314

Epoch 174/200

2083/2083 [==============================] - 22s 10ms/step - loss: 1.0018

Epoch 175/200

2083/2083 [==============================] - 20s 10ms/step - loss: 1.0124

Epoch 176/200

2083/2083 [==============================] - 21s 10ms/step - loss: 1.0036

Epoch 177/200

2083/2083 [==============================] - 22s 11ms/step - loss: 0.9950

Epoch 178/200

2083/2083 [==============================] - 23s 11ms/step - loss: 0.9993

Epoch 179/200

2083/2083 [==============================] - 21s 10ms/step - loss: 0.9909

Epoch 180/200

2083/2083 [==============================] - 32s 15ms/step - loss: 0.9957

Epoch 181/200

2083/2083 [==============================] - 22s 10ms/step - loss: 0.9966

Epoch 182/200

2083/2083 [==============================] - 24s 12ms/step - loss: 0.9896

Epoch 183/200

2083/2083 [==============================] - 23s 11ms/step - loss: 0.9803

Epoch 184/200

2083/2083 [==============================] - 23s 11ms/step - loss: 0.9797

Epoch 185/200

2083/2083 [==============================] - 24s 12ms/step - loss: 0.9621

Epoch 186/200

2083/2083 [==============================] - 22s 11ms/step - loss: 0.9808

Epoch 187/200

2083/2083 [==============================] - 22s 11ms/step - loss: 0.9747

Epoch 188/200

2083/2083 [==============================] - 28s 14ms/step - loss: 0.9642

Epoch 189/200

2083/2083 [==============================] - 28s 14ms/step - loss: 0.9633

Epoch 190/200

2083/2083 [==============================] - 29s 14ms/step - loss: 0.9460

Epoch 191/200

2083/2083 [==============================] - 25s 12ms/step - loss: 0.9605

Epoch 192/200

2083/2083 [==============================] - 29s 14ms/step - loss: 0.9480

Epoch 193/200

2083/2083 [==============================] - 26s 13ms/step - loss: 0.9451

Epoch 194/200

2083/2083 [==============================] - 19s 9ms/step - loss: 0.9382

Epoch 195/200

2083/2083 [==============================] - 20s 10ms/step - loss: 0.9595

Epoch 196/200

2083/2083 [==============================] - 24s 11ms/step - loss: 0.9227

Epoch 197/200

2083/2083 [==============================] - 24s 11ms/step - loss: 0.9242

Epoch 198/200

2083/2083 [==============================] - 17s 8ms/step - loss: 0.9273

Epoch 199/200

2083/2083 [==============================] - 20s 10ms/step - loss: 0.9230

Epoch 200/200

2083/2083 [==============================] - 23s 11ms/step - loss: 0.9315

<tensorflow.python.keras.callbacks.History at 0x7fc096a35150>

As we saw in previous classes, a model defined with batch_input_shape only accepts batches of exactly that size, both for validation and for prediction. To evaluate the model on a single sequence, we therefore create a new model with the same architecture but batch_size = 1, and copy the learned weights of the first model into it.
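Copying the weights is possible because none of the weight tensors depends on the batch size: their shapes are fixed by the input dimension and the number of units alone. The following is a minimal sketch of that bookkeeping, assuming the usual Keras layout for an LSTM layer (kernel, recurrent kernel and bias, with the four gates concatenated along the last axis). The helper names and the vocabulary size of 50 are hypothetical and only serve the illustration.

```python
def lstm_weight_shapes(input_dim, units):
    """Shapes of kernel, recurrent kernel and bias of one LSTM layer (assumed Keras layout)."""
    return [(input_dim, 4 * units), (units, 4 * units), (4 * units,)]


def dense_weight_shapes(input_dim, units):
    """Shapes of kernel and bias of one Dense layer."""
    return [(input_dim, units), (units,)]


def stacked_lstm_shapes(n_features, n_vocab, units=256):
    """Weight shapes for LSTM -> Dropout -> LSTM -> Dropout -> Dense.

    Dropout layers hold no weights, so they contribute nothing to the list.
    Note that the batch size is not an argument: it never enters any shape.
    """
    return (lstm_weight_shapes(n_features, units)
            + lstm_weight_shapes(units, units)
            + dense_weight_shapes(units, n_vocab))


# n_vocab=50 is a placeholder, not the value used in this notebook
shapes = stacked_lstm_shapes(n_features=1, n_vocab=50)
for shape in shapes:
    print(shape)
```

Since the list is the same for any batch size, the arrays returned by get_weights() on the trained model line up one to one with what set_weights() expects on the batch-size-1 model.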

# re-define the batch size

n_batch = 1

# re-define model

new_model = Sequential()

new_model.add(LSTM(256, batch_input_shape=(n_batch, X.shape[1], X.shape[2]), return_sequences=True))

new_model.add(Dropout(rate=0.2))

new_model.add(LSTM(256))

new_model.add(Dropout(rate=0.2))

new_model.add(Dense(y.shape[1], activation='softmax'))

# copy weights

old_weights = model.get_weights()

new_model.set_weights(old_weights)

# compile model

new_model.compile(loss='categorical_crossentropy', optimizer='adam')

# pick a random seed

start = np.random.randint(0, len(dataX)-1)

# copy the seed so that appending to it does not modify dataX

pattern = list(dataX[start])

print("Seed:")

print("\"", ''.join([int_to_char[value] for value in pattern]), "\"")

# generate characters

for i in range(1000):

    # shape the current window as (batch, time steps, features) and scale it

    x = np.reshape(pattern, (1, seq_length, 1))

    x = x / float(n_vocab)

    # predicted distribution over the next character

    prediction = new_model.predict(x, verbose=0)[0]

    # draw the next character index with the sample helper defined earlier

    index = sample(prediction, 0.3)

    result = int_to_char[index]

    sys.stdout.write(result)

    # slide the window: append the new character and drop the oldest one

    pattern.append(index)

    pattern = pattern[1:]

print("\nDone.")

Seed:

" e said, Is not he rightly named Jacob? for he hath

supplante "

d him anl that which

/home/julian/.local/lib/python3.7/site-packages/ipykernel_launcher.py:4: RuntimeWarning: divide by zero encountered in log

after removing the cwd from sys.path.

was in his son.

26:15 And the seven oe the man of Earaham stoedn them ont, and grom the land of Cgypt.

40:48 And the waters were iim to the len of the darth, and the sons of Esau, and the cameed a samoon in the land of Cgypt.

44:48 And the seventg day wat anl that he had mado of to drenk aerrreng, and the camees,

and the famene which he had spoken of the man; and he said, She caughter ald the diildren of Searaoh iis sesvants,

and said unto him, Where oe my mister Aaraham and the food of the land of Cgypt, and the wasers were the seven go twene betuid, and sent me to thei fown to the mend of Cgypt, and seid unto him, There on the land of Cgypt, and with thee and the men shall be mnten.

34:32 And the cemer were there things, and taid unto him, There oe my mister said unto him, Tho hs the land of Cgypt, and the farthin of the hand of Egypt, and terv them in the land of Cgypt.

44:40 And the sons of Dasaham's sarvant, and said unto Abramam, Iehold, ie and the theii

Done.
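The RuntimeWarning that interrupts the generated text above is most likely raised when the logarithm of an exactly zero probability is taken while rescaling the softmax output. The notebook's own sample helper is defined earlier and is not reproduced here. The function below is a hypothetical stand-in, sample_with_temperature, that sketches one way to avoid the warning: clip the probabilities to a small positive value (eps, an assumed constant) before taking the logarithm.

```python
import numpy as np


def sample_with_temperature(probs, temperature=1.0, rng=None, eps=1e-12):
    """Draw an index from probs after rescaling them with a temperature.

    Low temperatures sharpen the distribution (closer to argmax),
    high temperatures flatten it (closer to uniform).
    """
    rng = np.random.default_rng() if rng is None else rng
    probs = np.asarray(probs, dtype=np.float64)
    # clip before the log so that zero probabilities do not produce -inf
    logits = np.log(np.clip(probs, eps, 1.0)) / temperature
    # subtract the maximum for numerical stability before exponentiating
    logits -= logits.max()
    scaled = np.exp(logits)
    scaled /= scaled.sum()
    return int(rng.choice(len(scaled), p=scaled))


# toy distribution with an exact zero, which would trigger the warning without clipping
toy_probs = [0.0, 0.7, 0.3]
rng = np.random.default_rng(0)
draws = [sample_with_temperature(toy_probs, temperature=0.3, rng=rng) for _ in range(200)]
print(sorted(set(draws)))
```

With a temperature as low as 0.3 the most probable character dominates, which is consistent with the repetitive phrases in the generated text; a value closer to 1.0 would produce more varied but noisier output.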

# serialize model to JSON

model_json = new_model.to_json()

with open("modelgenDNNLSTM.json", "w") as json_file:

    json_file.write(model_json)

# serialize weights to HDF5

new_model.save_weights("modelgenDNNLSTM.h5")

print("Saved model to disk")

Saved model to disk